Smart Voice Control Calendar

A low-cost, highly capable, voice-controlled smart calendar

By Shulu Wan (sw889), Yiting Wang (yw883)

Project Objective:

Today's students live in a fast-moving society and keep tight schedules, taking many classes with numerous assignments, lab projects, and exams. A small smart calendar with a focused, useful feature set can help. Because it concentrates only on recording and displaying a student's schedule, it can remind students of upcoming deadlines and help them manage their time. It should be small enough to sit on a bedside table, like a humidity monitor, and cheap enough for any student to afford. Simple operation and voice control make the calendar more interactive and more appealing.

Introduction

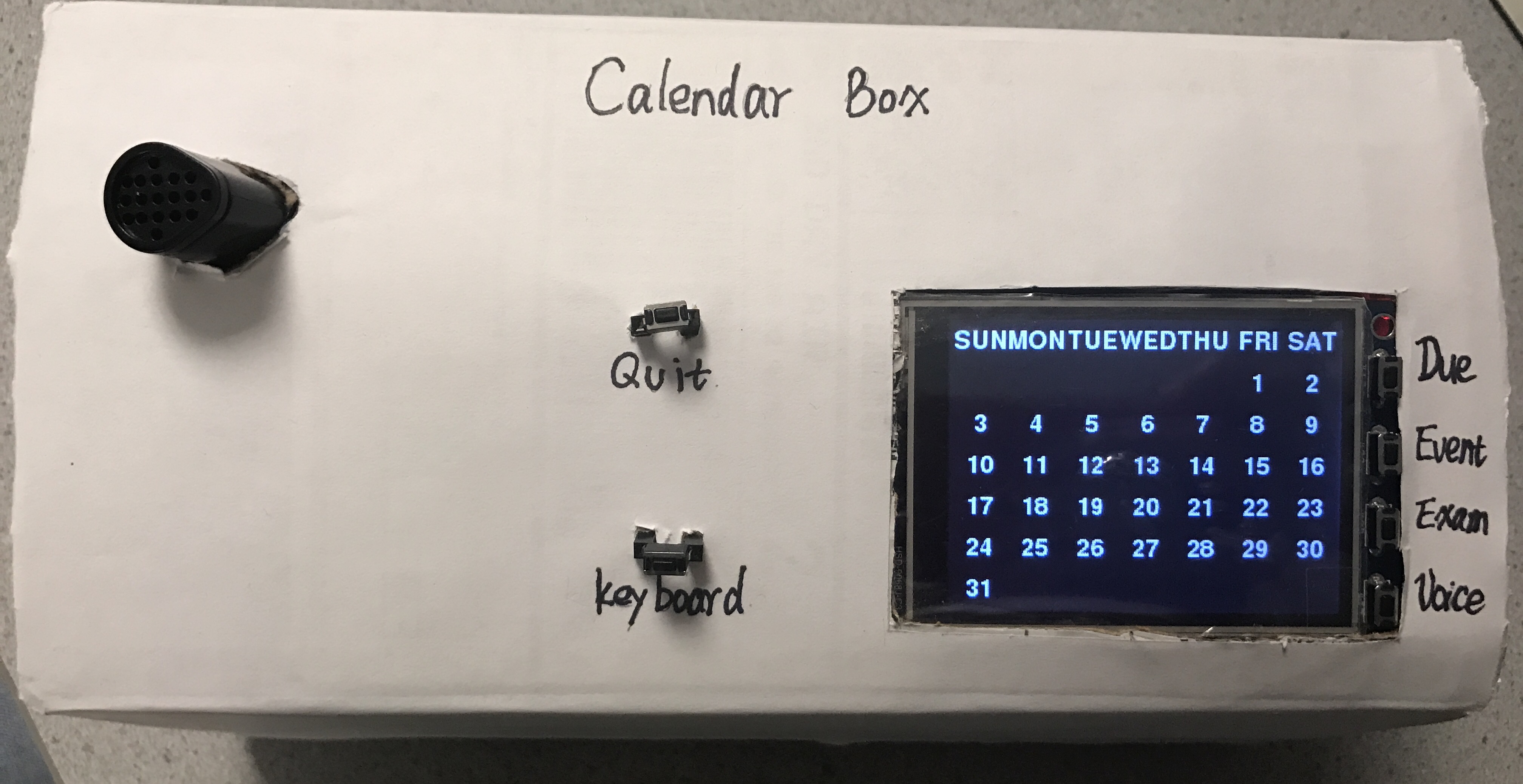

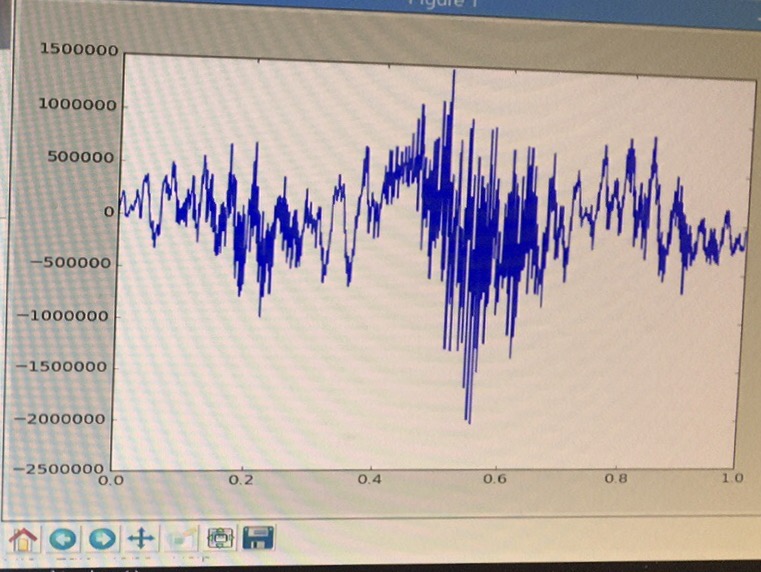

The goal of this project is to develop an easy-to-operate smart calendar with voice control. The work has two major parts: building the calendar and adding voice control. The calendar runs on a Raspberry Pi, and the PiTFT displays the calendar for December 2017. By pressing the buttons beside the PiTFT, users add events or activities to any day and launch a virtual keyboard to enter the time and name of each item following the input template “XXX ; 10:00 pm … ”. A USB microphone provides the voice control functions. After pressing the last button (GPIO pin 27), the user speaks a command into the microphone, and the screen returns the corresponding information. For example, when the user says “24 hours,” the smart calendar lists every deadline within the next 24 hours as a reminder. The built-in WiFi lets the calendar obtain the current time, which the deadline reminder needs. One extra button (GPIO pin 13) was installed to force-quit the program.

Drawings

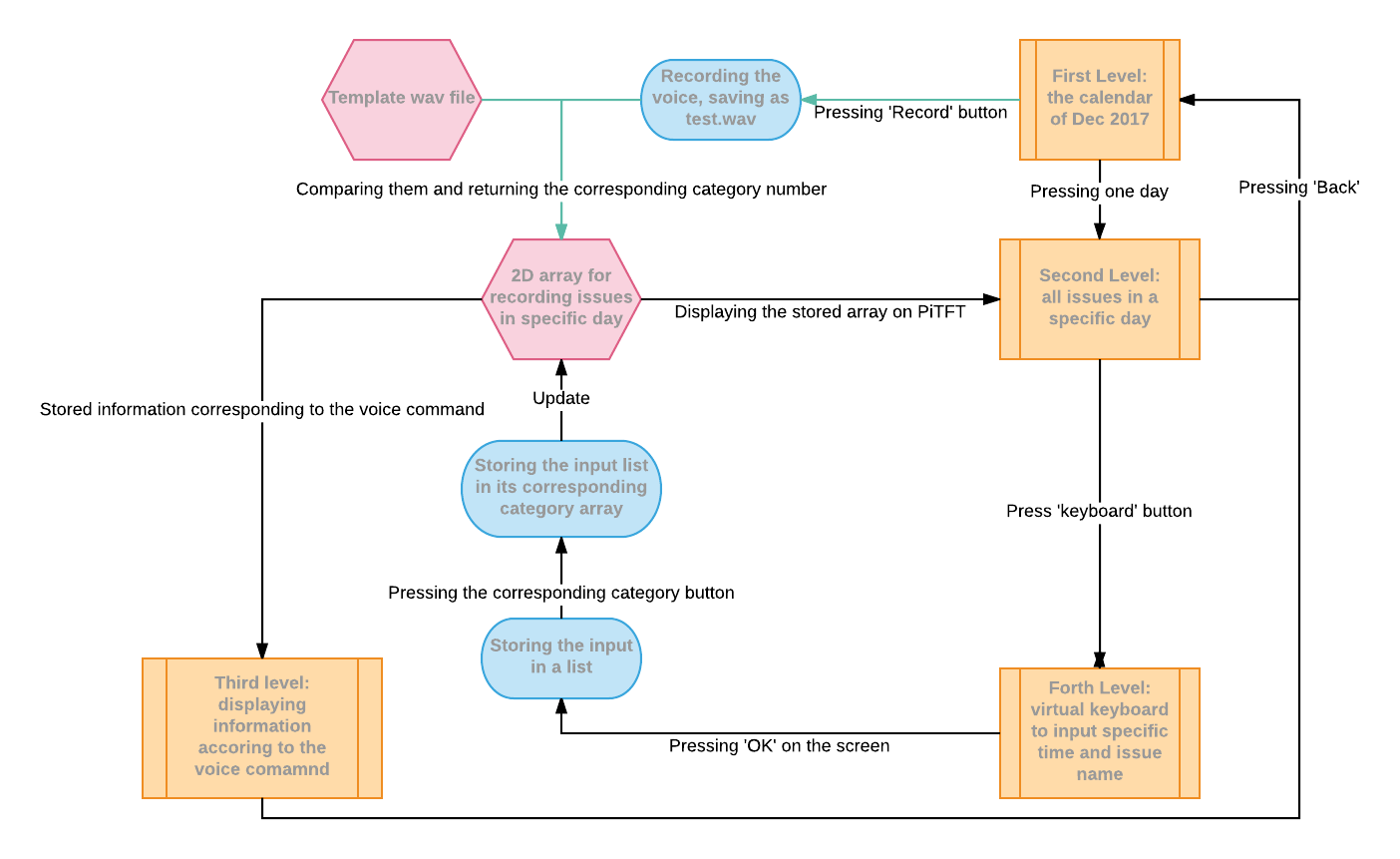

Flowchart of our project

Design

Calendar Part:

The calendar is the foundation of this project. We implemented four levels to build its framework. The first level displays the whole month (December 2017) on the PiTFT. The second level records items in the background: users press button #17 to save information about dues, button #22 for events, and button #23 for exams. The third level displays a summary of the recorded items in response to a voice command. The fourth level displays the virtual keyboard used for input in the second level. Detailed instructions for the smart calendar follow.

Tapping a particular day in the first level takes the calendar to the second level, which shows that day's schedule: the date, the dues, events, and exams, and a ‘back’ button that returns to the first level once the user has finished entering items. In the second level, users press the second outside button (GPIO pin 26) to enter the fourth level, where the virtual keyboard is displayed. Users enter the time and item name following the input template “5725 LAB3 ; 10:00 pm … ”. The input is echoed at the top left corner of the screen as it is typed. When finished, users press the ‘OK’ button on the screen to return to the second level, where they choose the category under which to record what they just entered. The first button (GPIO pin 17) saves it as a Due, an item with a deadline (like “5725 lab 4; 12:00 AM”). The second button (GPIO pin 22) saves it as an Event, something the user must attend (like “graduation ceremony; 12:00 PM”). The third button (GPIO pin 23) saves it as an Exam, which covers quizzes, prelims, and finals (like “5725 final; 2:00 PM”). Users can keep pressing buttons, entering information, and saving it as many times as they want in the second level, and they can re-enter the second level from the first whenever they need to add more items to that date. Everything entered is recorded in the background and used in the third level. Back in the first level, users press button #27 to start voice control. Depending on the spoken command, the program enters the third level and returns one of several summaries: “dues within 24 hours,” “dues within three days,” “all dues information,” or “all exams information.” The third level also includes a ‘back’ button that returns to the first level.
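The navigation between the four levels can be pictured as a small state machine. The following is a minimal sketch, not the project's actual code: the level names, the action strings, and the `next_level` function are hypothetical labels chosen for illustration, and only the transitions themselves come from the description above.

```python
# Hypothetical names for the four levels described in the text.
MONTH, DAY, SUMMARY, KEYBOARD = "month", "day", "summary", "keyboard"

# (current level, user action) -> next level. Action names are assumptions.
TRANSITIONS = {
    (MONTH, "tap_day"): DAY,            # tap a date on the month view
    (MONTH, "voice_command"): SUMMARY,  # button #27, then a spoken command
    (DAY, "open_keyboard"): KEYBOARD,   # outside button that launches the keyboard
    (DAY, "back"): MONTH,               # on-screen 'back' button
    (KEYBOARD, "ok"): DAY,              # on-screen 'OK' returns to the day view
    (SUMMARY, "back"): MONTH,           # on-screen 'back' button
}


def next_level(level, action):
    """Return the level reached from `level` by `action`; stay put if undefined."""
    return TRANSITIONS.get((level, action), level)
```

Keeping the transitions in one table makes it easy to check that every level has a way back to the month view.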

We built a Python program based on our Lab 3 project to implement the functions above. While designing the calendar we ran into several problems and eventually solved them. The biggest was how to receive the user's input. At first we used ‘raw_input()’ to let the user enter the details of each event. However, raw_input() reads from the console window, so when the calendar was shown on the PiTFT and we pressed the button to start typing, the calendar dropped back to the console. To address this, we defined every letter, number, and punctuation mark on the keyboard and used the pygame function ‘pygame.key.get_pressed()’ to capture multiple key presses. We displayed the user's input on the screen with ‘.join()’ to remove the commas between characters. We also added ‘backspace’ to delete a single character, so users need not worry about pressing a wrong key. Furthermore, to meet the goal of a “great” embedded system, we removed the physical keyboard and built a virtual one that the user launches on the screen by pressing one button. Overall, this series of improvements made input on our smart calendar more user-friendly.
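The input handling described here (collect characters, support backspace, join them for display) can be sketched without pygame. This is an illustrative sketch only: `apply_key` and `render` are hypothetical helper names, and treating any multi-character key name other than "backspace" as ignorable is an assumption.

```python
def apply_key(buffer, key):
    """Return a new list of typed characters after handling one key press."""
    if key == "backspace":
        return buffer[:-1]      # drop the last character, if there is one
    if len(key) == 1:
        return buffer + [key]   # ordinary printable character
    return buffer               # ignore any other key name (assumption)


def render(buffer):
    """Join the characters with no separator, so no commas show on screen."""
    return "".join(buffer)


typed = []
for key in ["L", "A", "B", "4", "backspace", "3"]:
    typed = apply_key(typed, key)
text = render(typed)  # "LAB3"
```

Printing the list directly would show brackets and commas between the characters, which is why the joined string is what goes to the screen.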

We addressed several other issues in the calendar part. When we entered the second level of the calendar, some words on the PiTFT flickered constantly. We found that this happened because we had written each part of the display as a separate function, each ending with its own ‘flip()’ call. Drawing everything first and calling flip once solved the problem.

We first tried to record the user's input with the list append function, but it gave wrong results: our main function is a while loop, so the list kept recording the same input every 0.1 second (we used time.sleep(0.1)). We therefore built a fixed-size list to record inputs.
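The repeated-append problem comes from acting on the button state on every pass of the loop. One common alternative to a fixed-size list, shown here only as a sketch and not as the fix we used, is to append once per press by detecting the moment the button goes from released to pressed. The function name `record_on_press` and the boolean sample list are assumptions for illustration.

```python
def record_on_press(samples, text):
    """Append `text` once per button press.

    `samples` holds one boolean per loop iteration (True = button pressed),
    standing in for the value read from the GPIO pin on each pass.
    """
    records = []
    was_pressed = False
    for pressed in samples:
        if pressed and not was_pressed:  # rising edge: the press just started
            records.append(text)
        was_pressed = pressed
    return records


# A press held for three iterations, then a second short press.
saved = record_on_press([False, True, True, True, False, True], "5725 lab 4; 12:00 AM")
```

Here `saved` contains two entries, one per press, even though the button read as pressed on four iterations.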

We first treated each list that records dues, events, or exams as a 1D list with one entry per day. Later we realized that a user may have more than one due on the same day, so we changed each to a 2D list: one dimension indexes the day, and the other holds all the dues (or events or exams) on that day. However, when the user entered more than one due, the display position of the due list shifted with the length of the input. To solve this, we replaced a.get_rect(center=(x,y)) with a.get_rect(topright=(x,y)), which pins a corner of the text at a fixed position instead of its center.
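The per-day structure can be sketched as a list of lists. This is a minimal illustration under assumptions: the names `dues` and `add_due` are hypothetical, the day number 15 is arbitrary, and the entries reuse example strings from this report.

```python
DAYS_IN_MONTH = 31  # December

# One independent list per day. The comprehension is deliberate:
# [[]] * 31 would create 31 references to the same inner list.
dues = [[] for _ in range(DAYS_IN_MONTH)]


def add_due(day, entry):
    """Record `entry` under `day`, where `day` is the 1-based day of the month."""
    dues[day - 1].append(entry)


add_due(15, "5725 lab 4; 12:00 AM")
add_due(15, "5725 LAB3 ; 10:00 pm")
```

The same layout would serve for the event and exam lists, with one outer list per category.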

Voice Control Part:

In the first level, where the PiTFT displays the calendar for December 2017, button #27 starts voice control. When the button is pressed, the microphone records the user's spoken request. The program compares the recorded data with stored templates, and the PiTFT displays the corresponding information. We built four voice commands: if the user says “twenty-four hours”, the PiTFT shows all dues within 24 hours; if the user says “three days”, it shows all dues within 3 days; if the user says “all due”, it shows all dues in the whole month; and if the user says “exams”, it shows all exams in the whole month, including quizzes, prelims, and finals.
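Once a command is recognized, answering “twenty-four hours” or “three days” comes down to filtering the recorded dues by deadline. The sketch below is written for Python 3 and assumes each entry follows the input template as “NAME ; HH:MM am/pm” with nothing after the time; `parse_entry` and `dues_within` are hypothetical helper names, and the sample entries and the “now” timestamp are made up for illustration.

```python
from datetime import datetime, timedelta


def parse_entry(day, entry, year=2017, month=12):
    """Split 'NAME ; HH:MM am/pm' into (name, deadline as a datetime)."""
    name, time_text = entry.split(";", 1)
    clock = datetime.strptime(time_text.strip().upper(), "%I:%M %p")
    deadline = datetime(year, month, day, clock.hour, clock.minute)
    return name.strip(), deadline


def dues_within(entries, now, hours):
    """Return the names of entries whose deadline lies in [now, now + hours]."""
    limit = now + timedelta(hours=hours)
    result = []
    for day, entry in entries:
        name, deadline = parse_entry(day, entry)
        if now <= deadline <= limit:
            result.append(name)
    return result


# (day of month, entry text) pairs; the current time would come from the system clock.
entries = [(15, "5725 LAB3 ; 10:00 pm"), (18, "5725 lab 4; 12:00 AM")]
now = datetime(2017, 12, 15, 9, 0)
next_day = dues_within(entries, now, 24)     # ["5725 LAB3"]
three_days = dues_within(entries, now, 72)   # ["5725 LAB3", "5725 lab 4"]
```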

We went through a lot of design and testing in this part. To check whether the microphone worked on our RPi, we used the command arecord -l. We found that our first microphone did not work and replaced it. Initially, to implement voice control, we used arecord, a command-line sound-file recorder for the ALSA sound-card driver. It successfully saved a few words recorded from the microphone to a wav file. However, because it is a command-line recorder, we tried to drive it from our Python file through a FIFO, as we had learned in Lab 1, and that attempt failed.
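For readers who want to stay with arecord, another route is to launch it from Python as a child process instead of going through a FIFO. This is a sketch we did not use in the project, written for Python 3; the helper names are hypothetical, and the defaults (1 second, 16-bit little-endian, 44100 Hz, mono) are assumptions chosen to mirror the settings used later in this report.

```python
import subprocess


def build_arecord_command(path, seconds=1, rate=44100, channels=1, device=None):
    """Build the argument list for a fixed-length arecord capture."""
    cmd = [
        "arecord",
        "-d", str(seconds),   # duration in seconds
        "-f", "S16_LE",       # 16-bit signed little-endian samples
        "-r", str(rate),      # sampling rate in Hz
        "-c", str(channels),  # number of channels
    ]
    if device is not None:
        cmd += ["-D", device]  # ALSA device name, e.g. as listed by arecord -l
    cmd.append(path)
    return cmd


def record(path, **options):
    """Run arecord and wait for it to finish; raises if arecord fails."""
    subprocess.run(build_arecord_command(path, **options), check=True)
```

Calling `record("sample.wav")` would block for the recording duration and leave a wav file at that path (the file name here is only an example).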

Since we could not work out how to use arecord from a Python file, we switched to pyaudio, which provides Python bindings for PortAudio and makes it easy to play and record audio from Python on a variety of platforms. The pyaudio site includes sample code for recording in chunks. It uses CHUNK, FORMAT, CHANNELS, and RATE to record the voice input to a wav file. To adapt this code, we worked out what each variable means. "RATE" is the sampling rate, i.e. the number of frames per second. "CHUNK" is the (arbitrarily chosen) number of frames in each block that the (potentially very long) signal is split into in this example. Each frame holds 2 samples because "CHANNELS=2." Each sample is 2 bytes, as returned by pyaudio.get_sample_size(pyaudio.paInt16), so each frame is 4 bytes. The for loop executes int(RATE*RECORD_SECONDS/CHUNK) times. RATE*RECORD_SECONDS is the number of frames that should be recorded; because the loop runs once per chunk rather than once per frame, that number is divided by the chunk size CHUNK.

In our project, we set RATE=44100 and CHUNK=1024. To simplify and speed up the project, we set CHANNELS=1 and RECORD_SECONDS=1. We also wrote a for loop in the recording Python file (now commented out) to find the right input_device_index for the audio.open command.
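The arithmetic behind these settings can be worked through in a few lines. This is a small sketch; `recording_plan` is a hypothetical helper, and the 2-byte sample size assumes the paInt16 format mentioned above.

```python
def recording_plan(rate, chunk, seconds, channels, sample_bytes=2):
    """Return (chunk reads, frames captured, bytes captured) for one recording."""
    reads = int(rate * seconds / chunk)    # iterations of the recording loop
    frames = reads * chunk                 # frames actually captured
    frame_bytes = channels * sample_bytes  # one sample per channel in each frame
    return reads, frames, frames * frame_bytes


# Our settings: RATE=44100, CHUNK=1024, RECORD_SECONDS=1, CHANNELS=1.
mono = recording_plan(44100, 1024, 1, 1)    # (43, 44032, 88064)

# Same rate and chunk size with two channels, for comparison.
stereo = recording_plan(44100, 1024, 1, 2)  # (43, 44032, 176128)
```

Because the loop count is rounded down, 43 reads capture 44032 frames, slightly less than one full second at 44100 frames per second.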

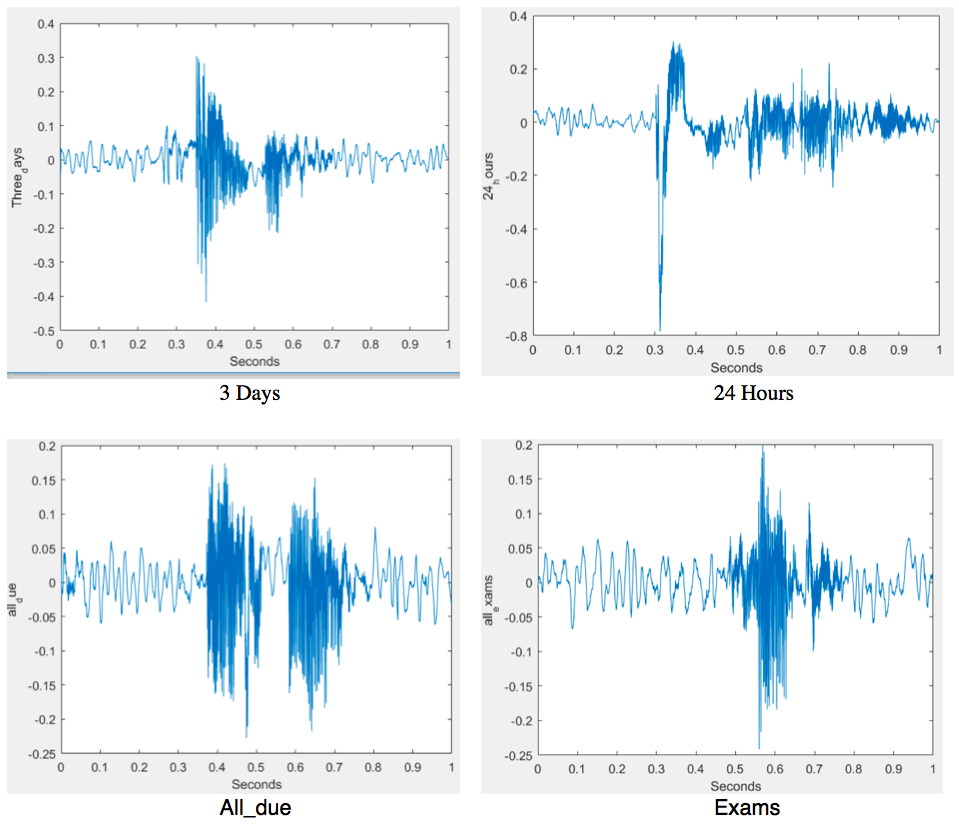

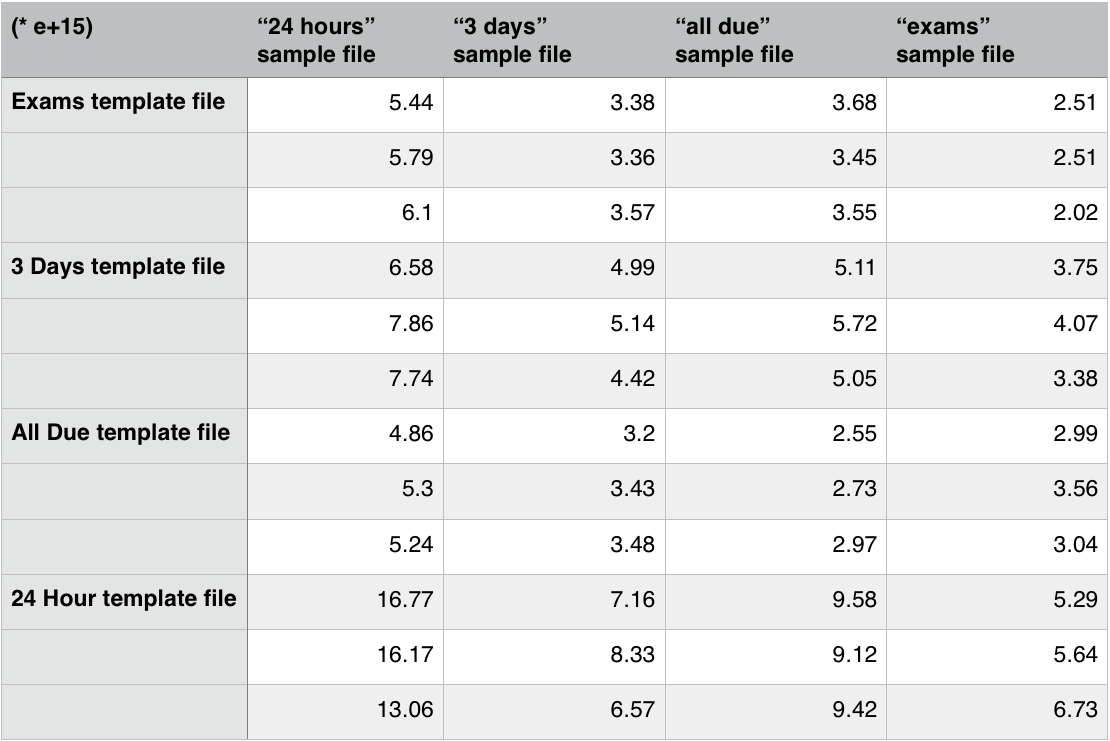

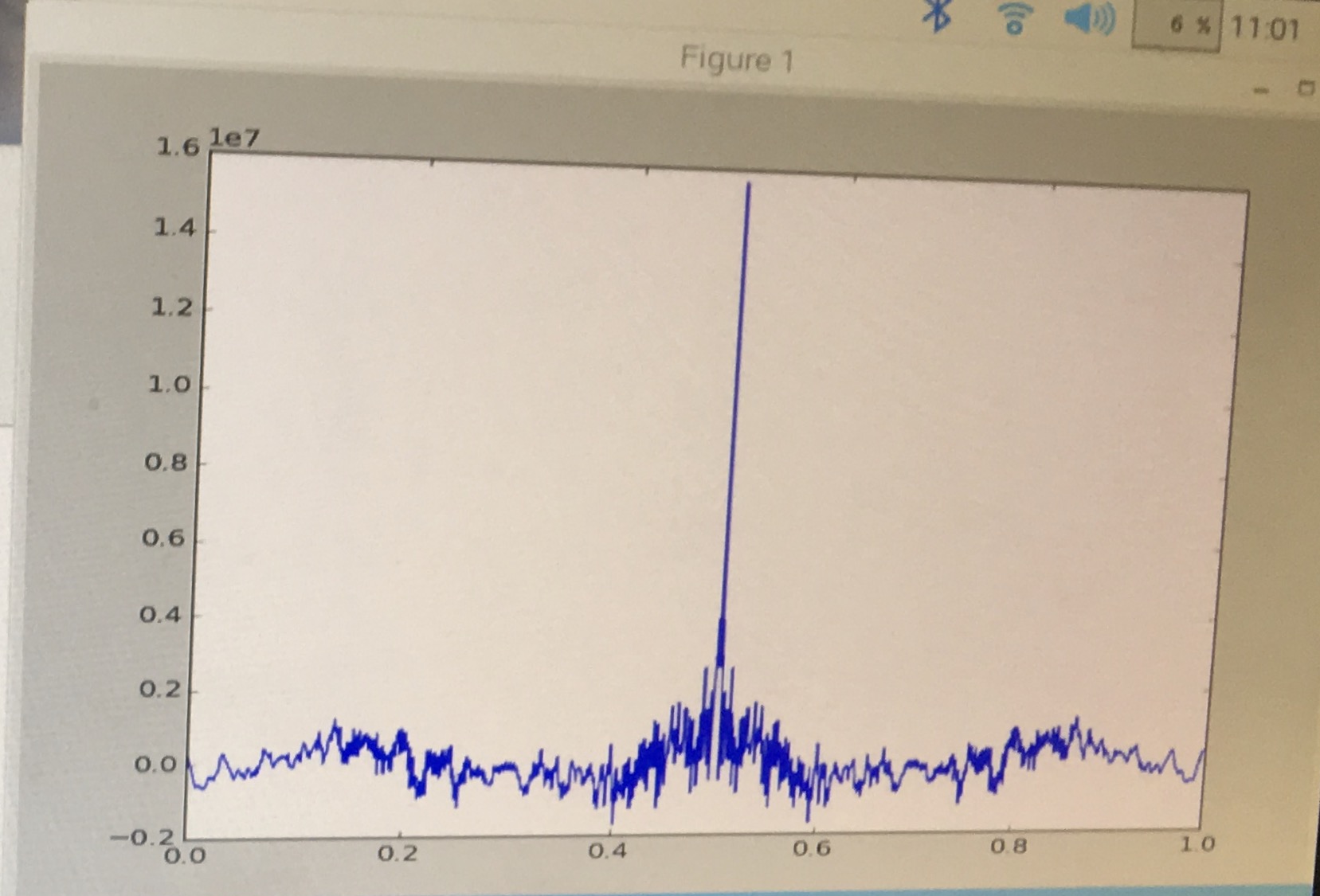

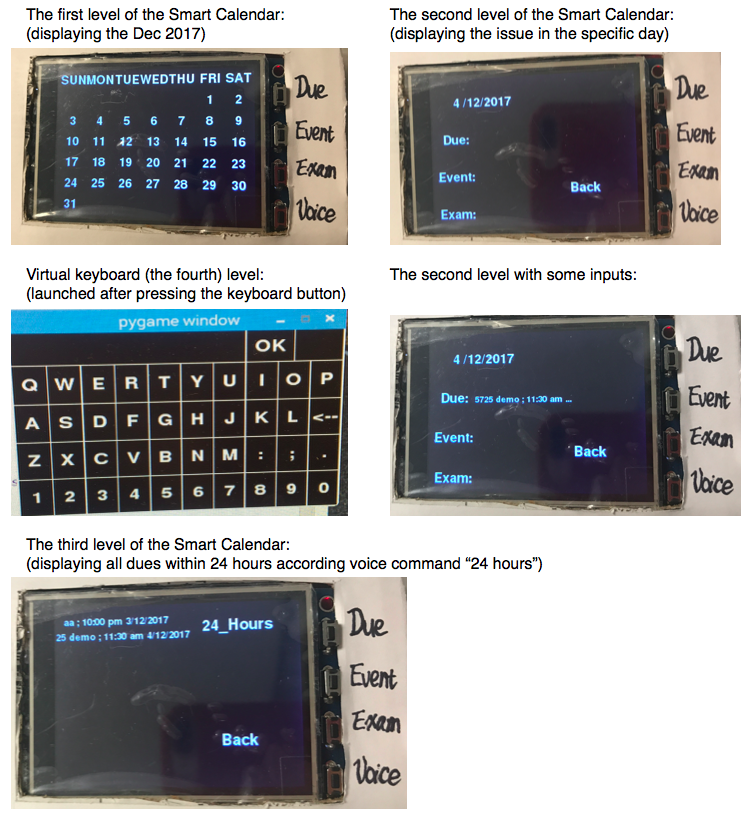

After capturing chunks from the microphone and saving them to a wav file, we used Matlab to plot the signal in the time domain. From the plots of the four commands, we saw that the “24 hours” and “exams” plots are clearly different from the others, but “all due” and “3 days” resemble each other. We expected this similarity to cause errors when distinguishing “all due” from “3 days” in the following steps.
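We did the plotting in Matlab, but the same time-domain data can be pulled out of a wav file with Python's standard library. The sketch below, written for Python 3, assumes 16-bit mono audio; `write_wav` and `read_wav` are hypothetical helpers, and a short synthetic 440 Hz tone stands in for a real recording so the example runs without a microphone.

```python
import io
import math
import struct
import wave


def write_wav(target, samples, rate=44100):
    """Write 16-bit mono samples to `target` (a path or a file-like object)."""
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample
        wav.setframerate(rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))


def read_wav(source):
    """Return (rate, samples) for a 16-bit mono wav file."""
    with wave.open(source, "rb") as wav:
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())
    return rate, list(struct.unpack("<%dh" % (len(raw) // 2), raw))


# Stand-in for a recording: 0.01 s of a 440 Hz tone.
tone = [int(10000 * math.sin(2 * math.pi * 440 * n / 44100)) for n in range(441)]
buffer = io.BytesIO()
write_wav(buffer, tone)
buffer.seek(0)
rate, samples = read_wav(buffer)
times = [n / rate for n in range(len(samples))]  # x axis for a time-domain plot
```

Plotting `samples` against `times` with any plotting tool gives the kind of waveform shown in the figures above.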

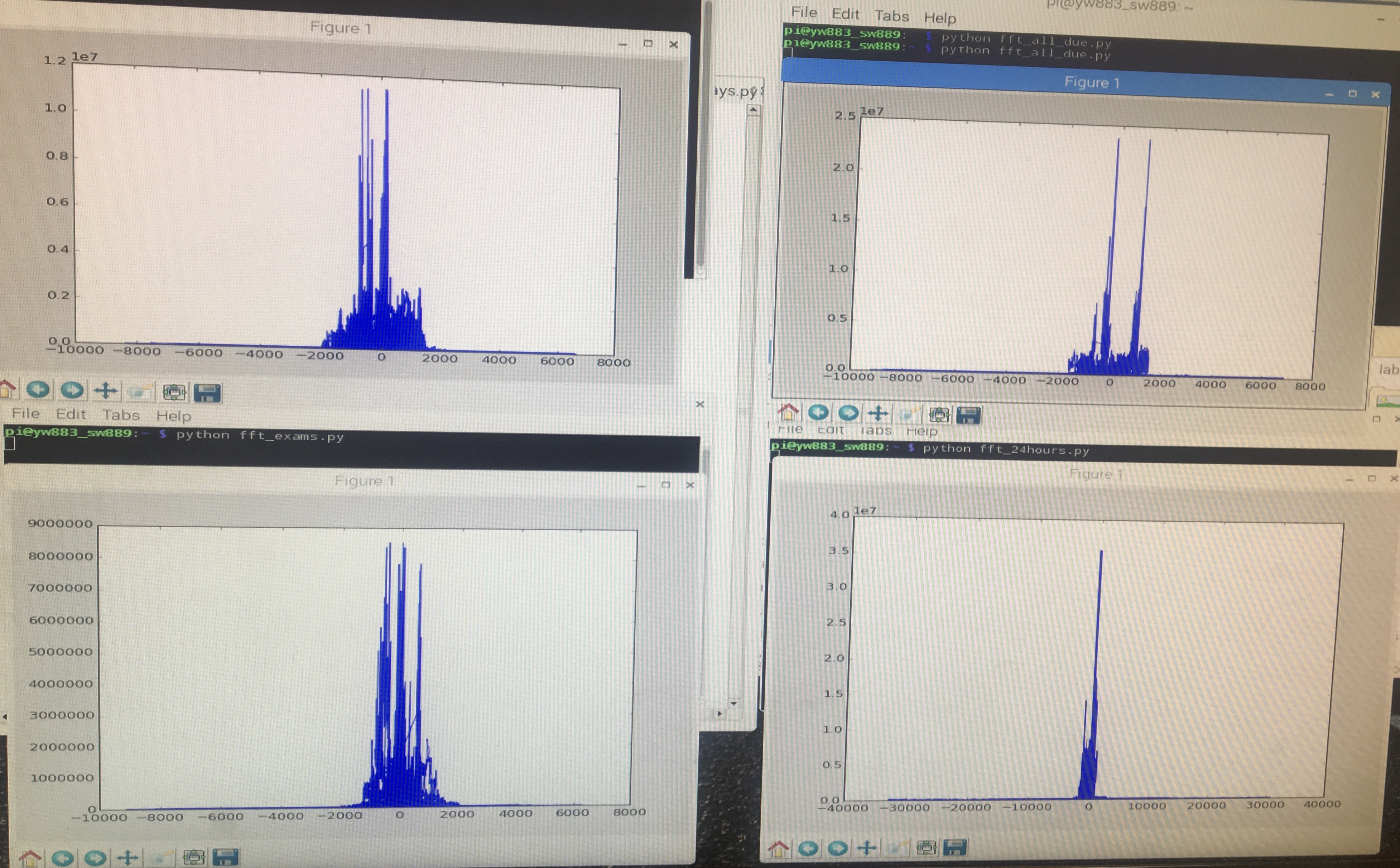

Following this analysis and assumption, we plotted the sound in real time in Python and applied a Fourier transform to it. We imported fft from scipy.fftpack and applied it to both the template and the sample wav files. We then computed the one-dimensional cross-correlation between them and took the maximum value of the result.
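The comparison step, cross-correlating two sequences and keeping the peak, can be sketched in plain Python. This is an illustration and not our project code: it uses a direct sum over every lag so that it runs without extra libraries, the function names are hypothetical, and the short integer lists stand in for the much longer sequences derived from the wav files.

```python
def cross_correlation(x, y):
    """Full 1-D cross-correlation of x and y, one value per lag (direct sum)."""
    result = []
    for lag in range(-(len(y) - 1), len(x)):
        total = 0
        for j, y_value in enumerate(y):
            i = lag + j
            if 0 <= i < len(x):  # only overlapping positions contribute
                total += x[i] * y_value
        result.append(total)
    return result


def peak_correlation(x, y):
    """Largest cross-correlation value over all lags."""
    return max(cross_correlation(x, y))


template = [0, 1, 2, 1, 0]
sample = [0, 0, 1, 2, 1, 0, 0]  # the same shape, shifted by one position
peak = peak_correlation(sample, template)  # 6
```

The direct sum is slow for sequences tens of thousands of points long; in practice a library routine such as `numpy.correlate(x, y, mode="full")` would be the usual choice.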

We applied this calculation to the four voice commands. Based on the maximum values observed for each command, we defined a range of maximum values for each category. When compared with the “24 hours” template, a sample matches the “24 hours” command if the maximum value is larger than 8e+15. When compared with the “exams” template, a sample matches the “exams” command if the maximum value is smaller than 3.5e+15. When compared with the “all due” template, a sample matches the “all due” command if the maximum value is larger than 2.5e+15 and smaller than 3e+15. When compared with the “3 days” template, a sample matches the “3 days” command if the maximum value is larger than 3.5e+15 and smaller than 5.2e+15. According to our test results and these estimated ranges, the “all due” and “3 days” commands are indeed hard to distinguish, which confirmed our earlier assumption.
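These ranges translate directly into a lookup table. The sketch below is illustrative: `RANGES` and `matches` are hypothetical names, the bounds are the ones quoted above, and `peak` is assumed to be the maximum cross-correlation of the sample against that command's own template.

```python
# (lower bound, upper bound) per command; None means unbounded on that side.
RANGES = {
    "24 hours": (8e15, None),
    "exams": (None, 3.5e15),
    "all due": (2.5e15, 3e15),
    "3 days": (3.5e15, 5.2e15),
}


def matches(command, peak):
    """Return True if `peak` falls strictly inside the range for `command`."""
    low, high = RANGES[command]
    above = low is None or peak > low
    below = high is None or peak < high
    return above and below
```

For example, `matches("3 days", 4e15)` is true, while `matches("24 hours", 7e15)` is false.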

The voice control part is based on signal analysis. To better distinguish “all due” from “3 days,” we tried many methods. Although our attempts did not fully solve this problem, we concluded that our calculation method was sound and that the issue was caused by noise in the audio files, which could be addressed with machine learning techniques in future work.

Some of the attempts we made to improve the precision of voice control are described below. Although they did not help our project much, they are still worthwhile ideas. We first tried normalizing the result of the cross-correlation. We calculated the autocorrelation arrays of the template file and the sample file and took from each the autocorrelation coefficient, which is the maximum value of the autocorrelation array. We then normalized every element of the cross-correlation array as Pxy(i) / sqrt(Pxx(0)*Pyy(0)), so that the maximum value of the normalized array is the result we want. In theory, if the contents of the template file and the sample file match, the absolute value of this result should lie between 0.9 and 1. In practice we obtained 0.6, so we concluded that the problem was caused by the audio itself and not by our calculation method. We also tried normalizing before the cross-correlation, which did not help much either. The results are shown in the testing part.
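The normalization can be sketched as follows, assuming the denominator is the square root of the product of the two zero-lag autocorrelations, Pxx(0) and Pyy(0). This is an illustration in plain Python, not our project code; `normalized_peak` is a hypothetical name and the short lists stand in for real audio data.

```python
import math


def normalized_peak(x, y):
    """Largest |Pxy(i)| / sqrt(Pxx(0) * Pyy(0)) over all lags i."""
    pxx0 = sum(v * v for v in x)  # autocorrelation of x at lag 0
    pyy0 = sum(v * v for v in y)  # autocorrelation of y at lag 0
    best = 0.0
    for lag in range(-(len(y) - 1), len(x)):
        total = 0.0
        for j, y_value in enumerate(y):
            i = lag + j
            if 0 <= i < len(x):  # only overlapping positions contribute
                total += x[i] * y_value
        best = max(best, abs(total))
    return best / math.sqrt(pxx0 * pyy0)


same = normalized_peak([1, 2, 3], [1, 2, 3])    # identical signals
louder = normalized_peak([1, 2, 3], [2, 4, 6])  # same shape, twice the volume
other = normalized_peak([1, -1], [1, 1])        # different shapes
```

Identical signals, and signals that differ only in volume, score 1.0 (up to rounding), while the mismatched pair scores 0.5. Removing the dependence on volume is the point of the normalization.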

One common issue we solved in this part was that noise in the recording was too loud for the content to come through clearly. We suspected the Alsamixer settings; Alsamixer is the system mixer that controls the microphone. We tried raising the volume setting in Alsamixer to 100% to solve this problem.

Testing

Result

We implemented a capable smart calendar on the Raspberry Pi. It is a working embedded system with a virtual keyboard, touch screen, and voice control. The microphone successfully records the user's command, and the calendar returns the corresponding information. It is small enough to sit on a bedside table, and at $43 it is affordable for students. The results are shown below.

Conclusion

Overall, our final project was a success. We built an embedded smart calendar with a virtual keyboard, touch screen, and voice control. We applied what we learned in the course and also gained knowledge beyond it, such as signal processing, voice waveform plotting, voice control, and waveform comparison. We made several tradeoffs while implementing these functions to improve data and calculation accuracy. We ran into several difficulties and gradually worked through the problems and bugs. Through this project we gained a lot of experience, and what we learned will be valuable for future study in embedded operating systems. Finally, we are grateful for the help we received from our professor and TAs.

Future Work

For the voice control part, we will use Mel-frequency cepstral coefficients (MFCC) to analyze the audio files, which should provide more accurate voice features. We will also try machine learning to train on the voice commands. Moreover, the current version can only recognize Yiting's voice. In the future, we will add a voice recognition function: when users first use the calendar, they will be asked to record their own voice as template files so that the calendar can recognize them.

For the calendar part, we will add support for other years and months and design virtual buttons for paging through the events the user has entered, so that the small screen no longer limits how many events can be shown.

Work Distribution

Shulu Wan

sw889@cornell.edu

Implemented the calendar; Researched voice recording; Worked on the website and report.

Yiting Wang

yw883@cornell.edu

Implemented the calendar; Built the virtual keyboard; Implemented the voice control; Helped with the report.

Parts List

- Raspberry Pi: $35.00

- Microphone: $8.00

Total: $43.00

References

Pyaudio Instruction

Useful Pyaudio Sample Coding

Pygame Keyboard Document

Audio Recording Document

Cross Correlation Calculation

Mel-frequency cepstrum Introduction

R-Pi GPIO Document

Code Appendix

© 2017 Shulu Wan, Yiting Wang. All rights reserved.