Entertaining Humanoid Robot

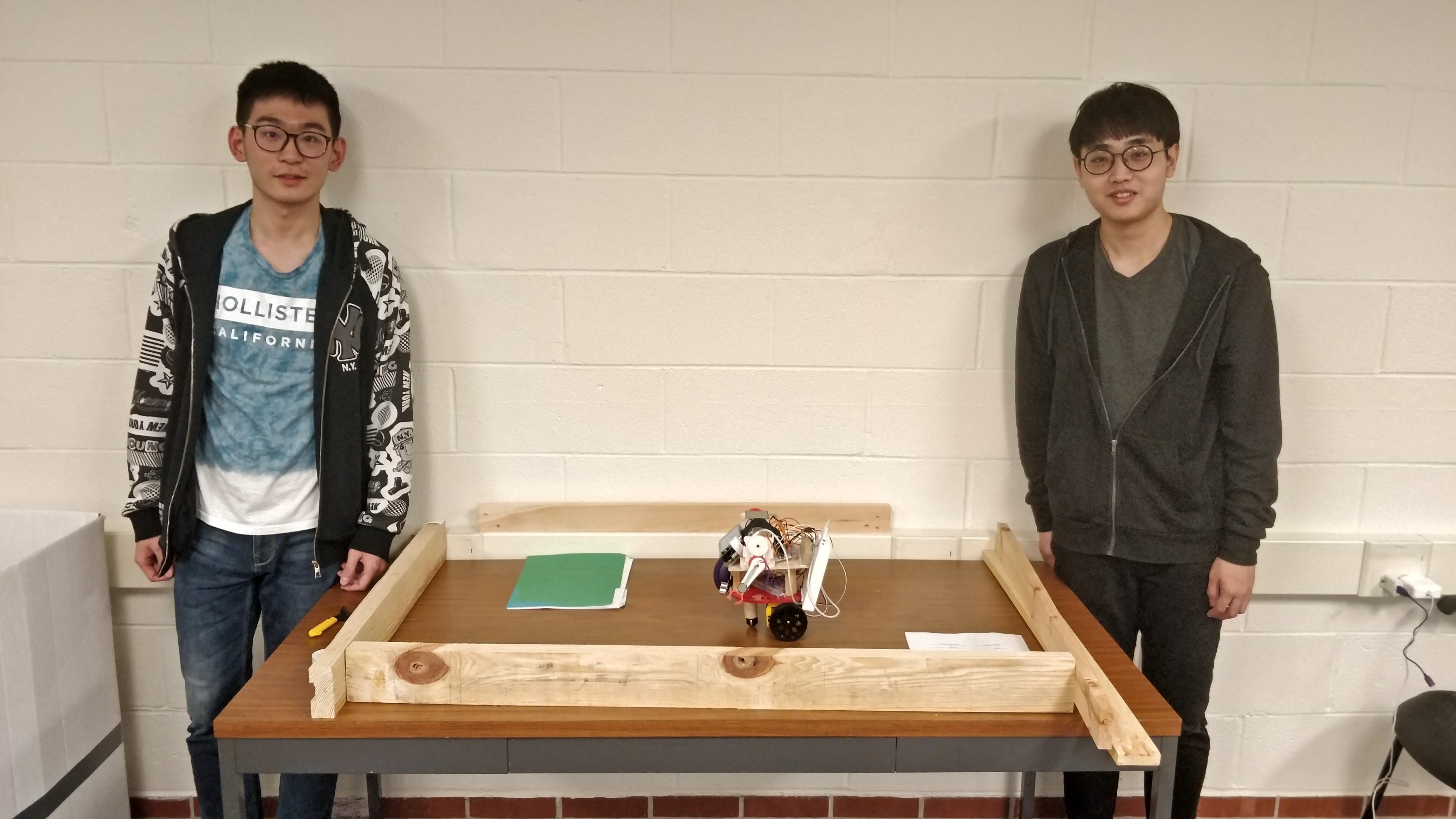

Project By Xinyu Wang(xw474), Mingyang Yang(my464)

May 15th 2018

Project Objective

- An entertaining humanoid robot with various emotions including “happy”, “angry”, “sad”, “bored” and “wander” related to different functions

- Hand gesture recognition system for “Paper, Scissor, Rock” game

- Human-Robot interaction with pressure sensor and speech player

- Simple music player and user-friendly GUI

- Automatic movement with obstacle avoidance

Introduction

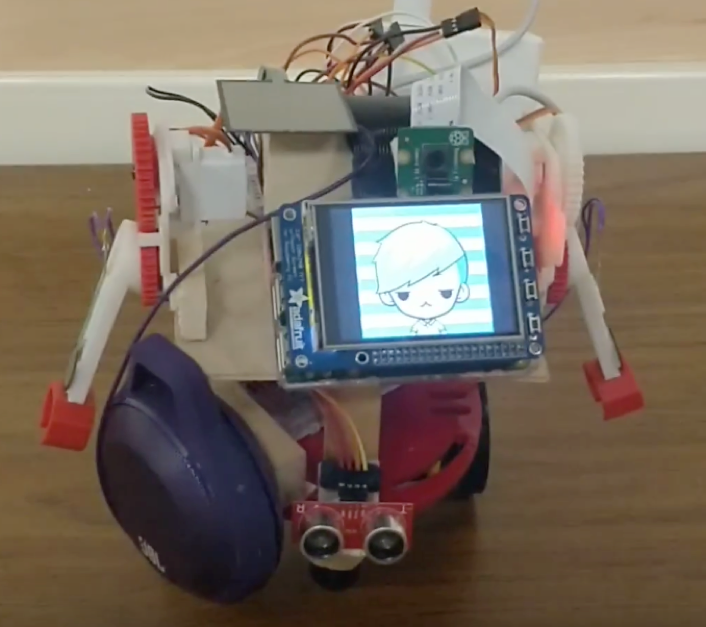

We built an entertaining humanoid robot based on the Raspberry Pi. Its purpose is to entertain people with its cute appearance and its functions. We designed four emotions for the robot: happy, sad, angry and bored. The emotions appear in random order and are connected by a wander mode, and each emotion comes with its own functions.

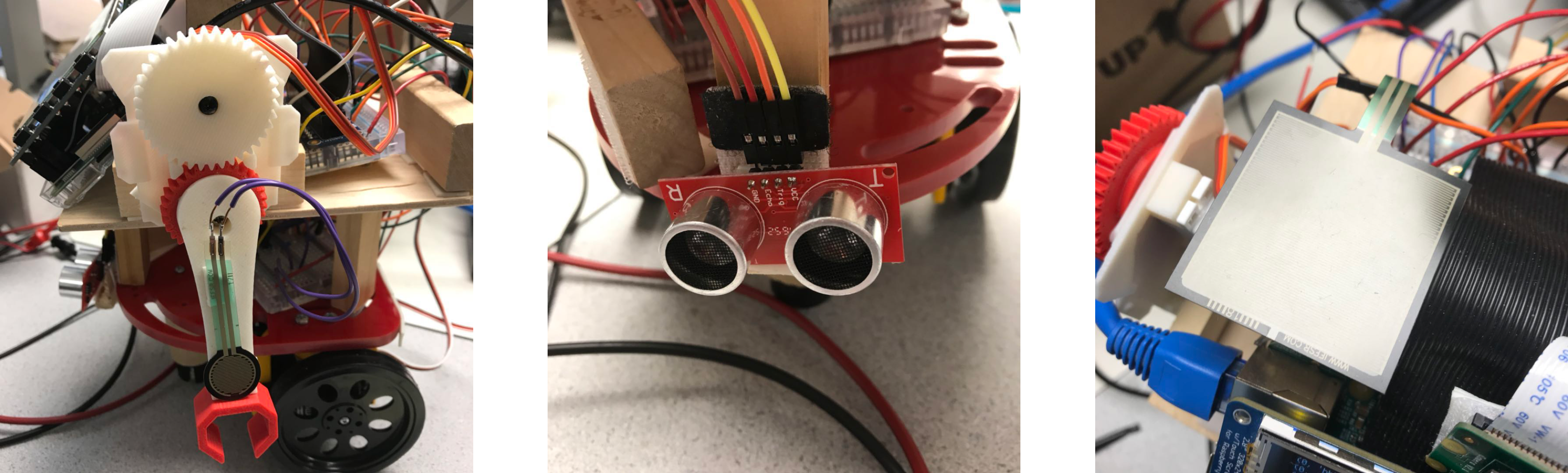

Hardware Design

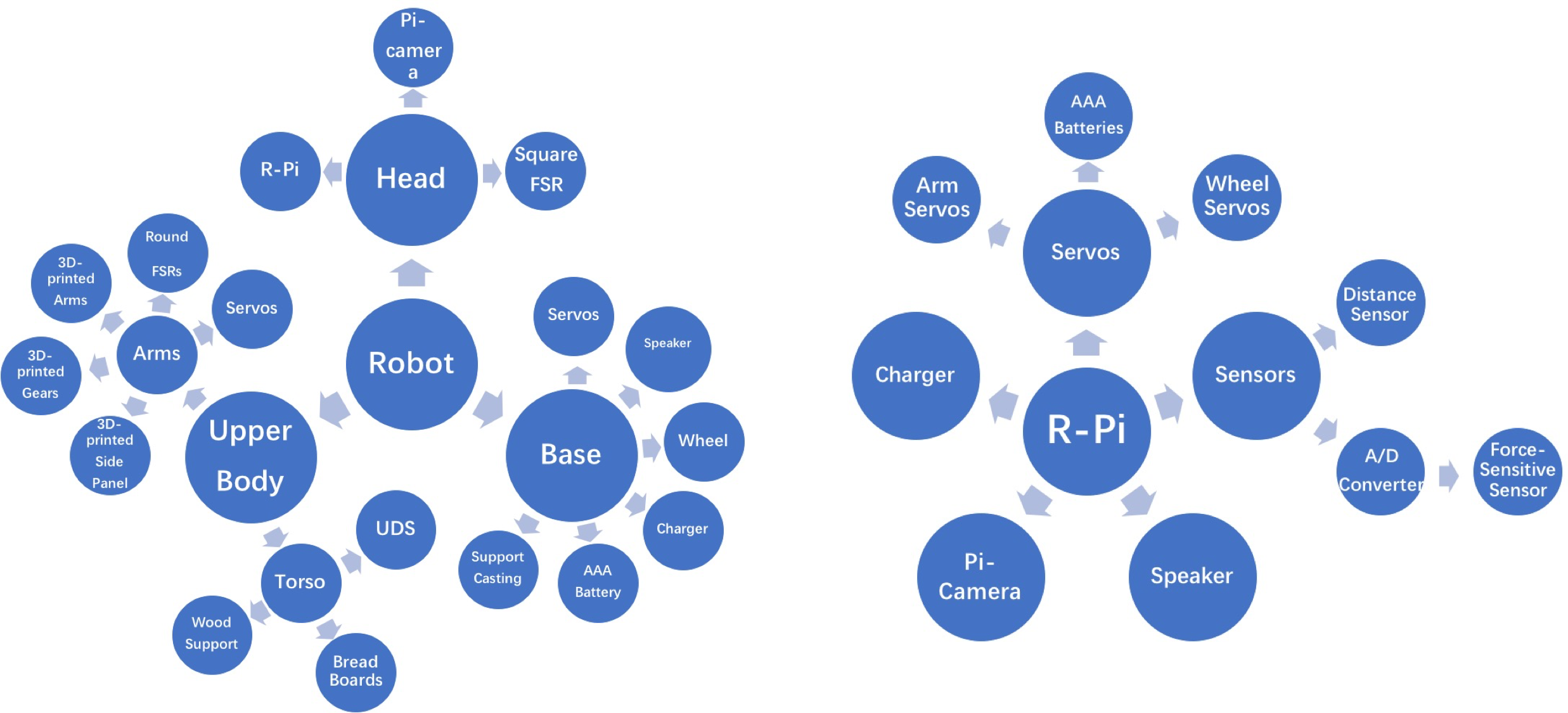

From the structural point of view, our hardware is shown in the left figure. It consists of three main parts: the head, the upper body and the base. The head holds the Pi camera, a square force-sensitive resistor (SFSR) and the Raspberry Pi, which displays the interfaces and expressions. The upper body consists of the arms and the torso. The arms comprise the arm servos, round force-sensitive resistors (RFSR), 3D-printed arms and hands, arm gears, servo gears and head side panels. The torso holds the ultrasonic distance sensor (UDS), the wood support structures and the breadboards. The base holds the charger, speaker, wheel servos, wheels, support casting and AAA batteries.

From the functional point of view, our hardware is shown in the right figure. There are five main components: servos, sensors, speaker, charger and Pi camera. The servo group comprises the arm servos, wheel servos and AAA batteries. The sensor group comprises the force-sensitive resistors and the ultrasonic distance sensor.

Component hardware structure (left) and functional hardware structure (right)

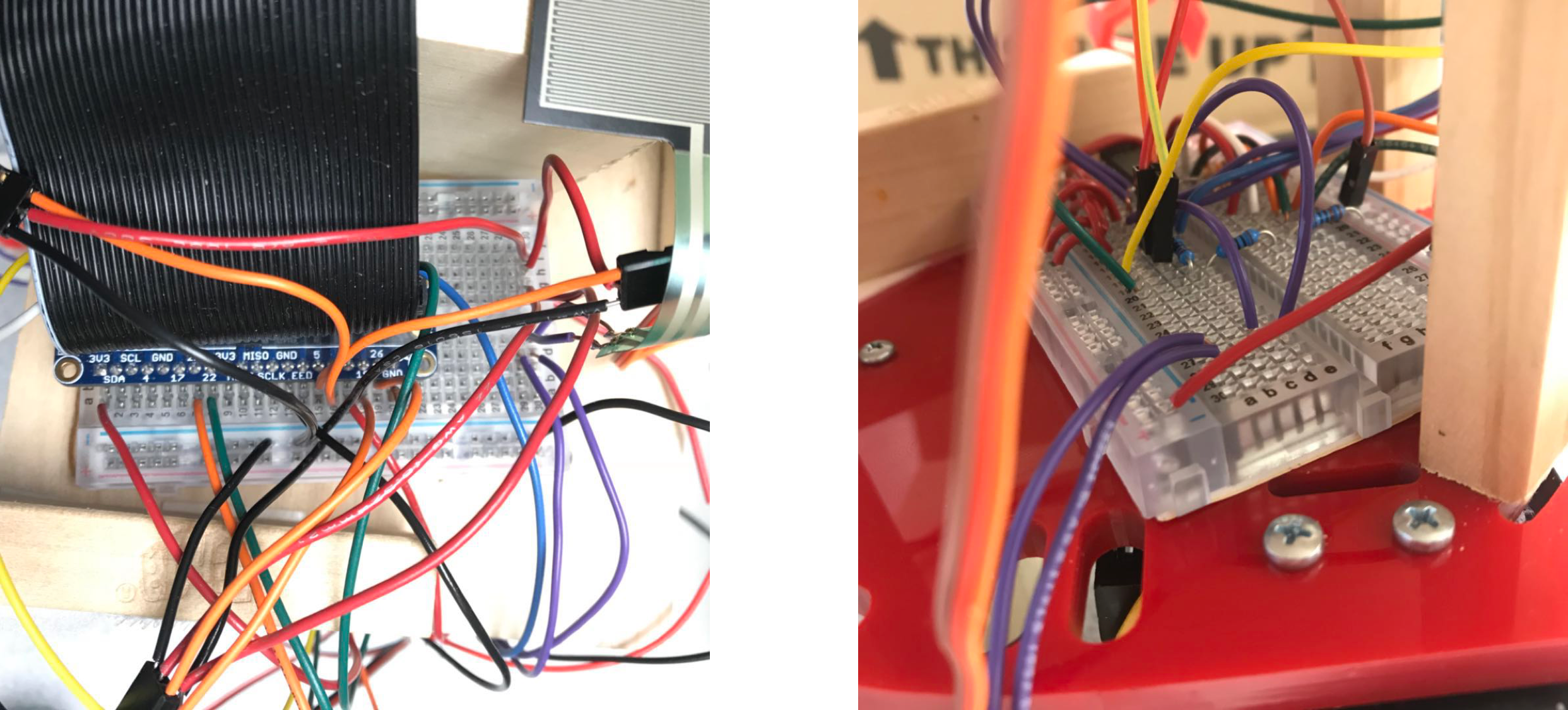

Circuit Design

Sensor Installation

Software Design

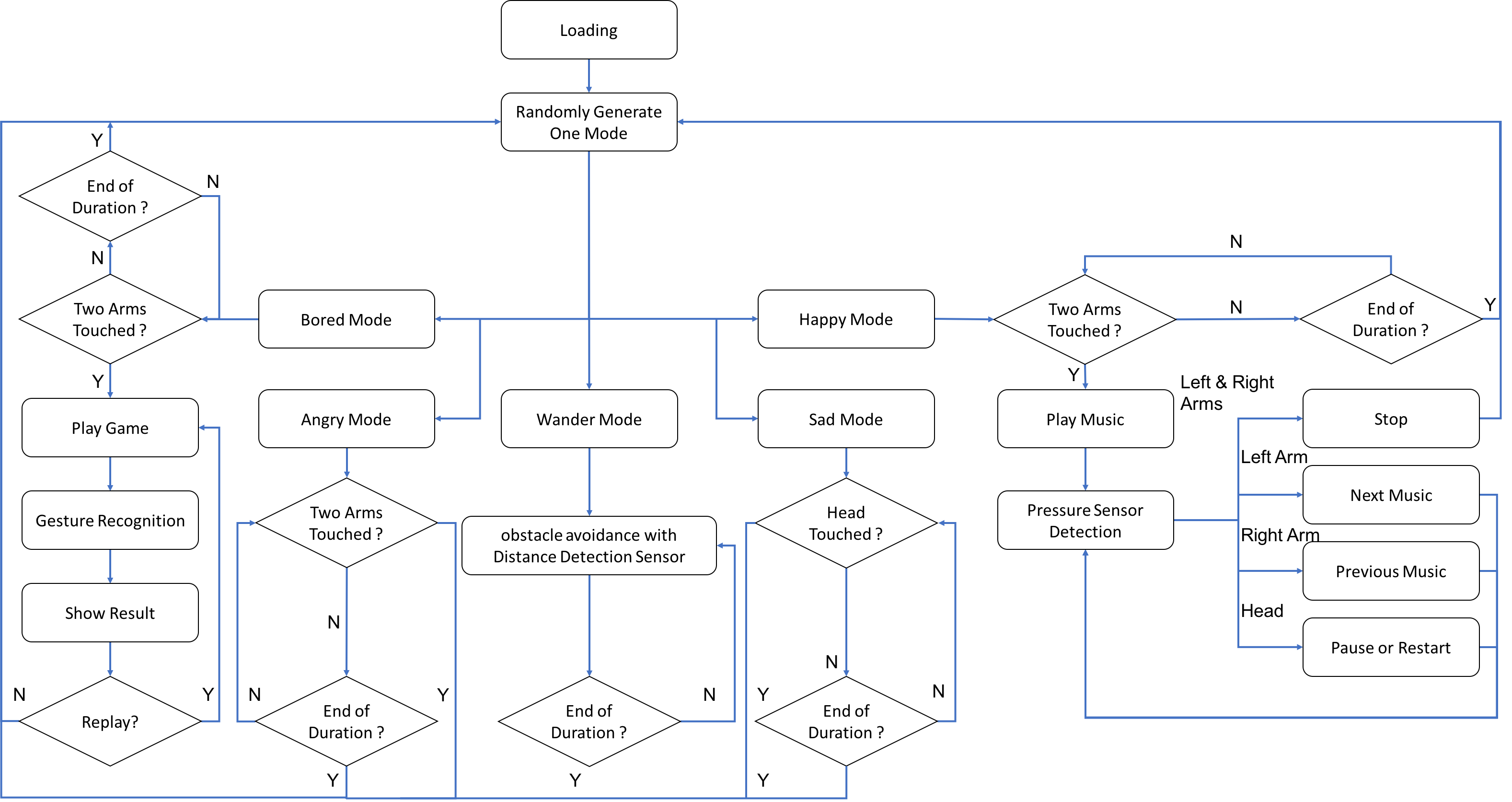

The robot has five modes: happy, sad, angry, bored and wander. Each mode offers a different interaction with people. The overall structure of the robot’s modes is shown below.

Overall Function Structure
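The mode structure can be sketched in a few lines of Python. This is a minimal illustration, assuming that each emotion is drawn uniformly at random and is always followed by one wander phase; the name `mode_sequence` is hypothetical and does not appear in the project code.

```python
import random

EMOTIONS = ["happy", "sad", "angry", "bored"]


def mode_sequence(n_emotions, rng=None):
    """Return a list of modes in which every emotion is followed by 'wander'."""
    rng = rng or random.Random()
    sequence = []
    for _ in range(n_emotions):
        sequence.append(rng.choice(EMOTIONS))  # pick the next emotion at random
        sequence.append("wander")              # wander fills the gap before the next emotion
    return sequence


if __name__ == "__main__":
    print(mode_sequence(3))
```

Passing a seeded `random.Random` makes the sequence repeatable, which can help when rehearsing a demo.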

Robot Interaction Design

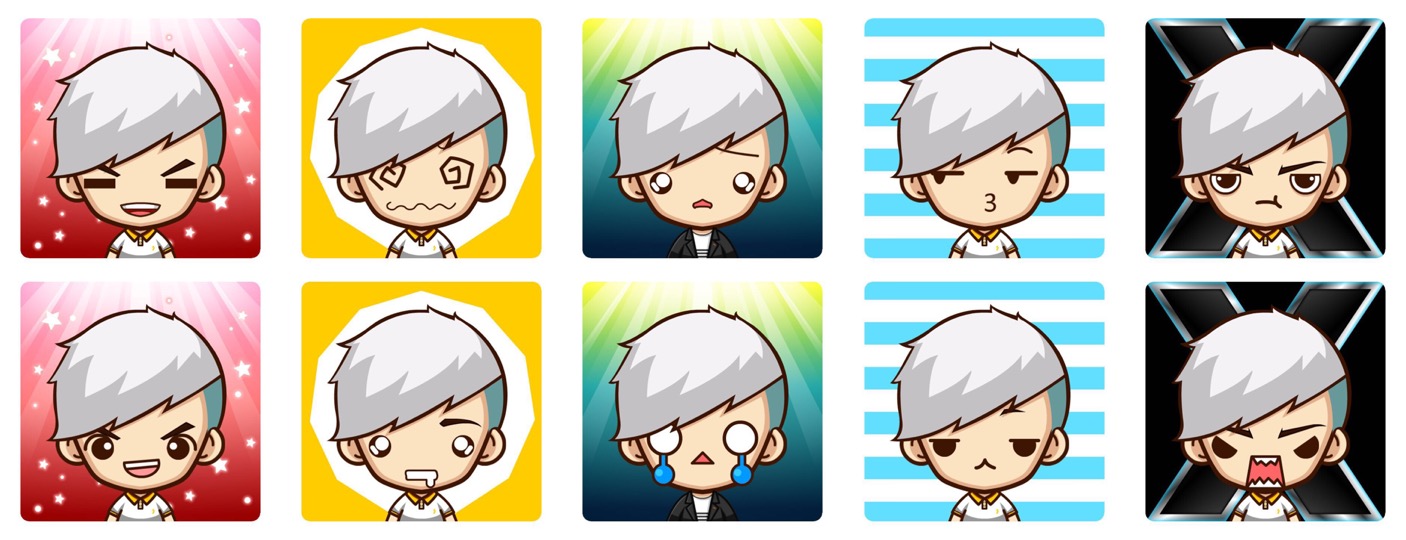

For each mode, the robot interacts with people differently, and the interaction can be divided into face, voice and control. For the face module, we designed two consecutive face images with SuperMe, a mobile phone app, and used PyGame to show them alternately in a loop to create an animation effect. Each mode has its own face animation, as shown in the figures below. For the voice module, we used the Festival library to generate speech from text. For example, when the robot feels sad, it says “I just failed my prelim, I am so upset”. For control, the interaction uses three pressure sensors: one is placed on the head and the other two are placed on the arms. Each sensor plays a different role in each mode.

- Happy: Touching the left and right sensors triggers the music player

- Bored: Touching the left and right sensors triggers the game

- Sad: Touching the head sensor quits this mode

- Angry: Touching both the left and right sensors quits this mode

- Wander: The robot moves automatically
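The list above amounts to a small lookup from mode and touched sensors to an action. The sketch below is one way to write it, assuming that "left and right" always means both arm sensors pressed together; the function name and the action labels are hypothetical and are not taken from the project code.

```python
def interaction(mode, head, left, right):
    """Map the current mode and the pressed sensors to an action label.

    head, left and right are booleans telling whether each sensor is pressed.
    """
    both_arms = left and right
    if mode == "happy" and both_arms:
        return "start_music_player"
    if mode == "bored" and both_arms:
        return "start_game"
    if mode == "sad" and head:
        return "quit_mode"
    if mode == "angry" and both_arms:
        return "quit_mode"
    return "none"  # wander mode, or a touch that means nothing in this mode
```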

Hand Gesture Recognition

Gesture Recognition

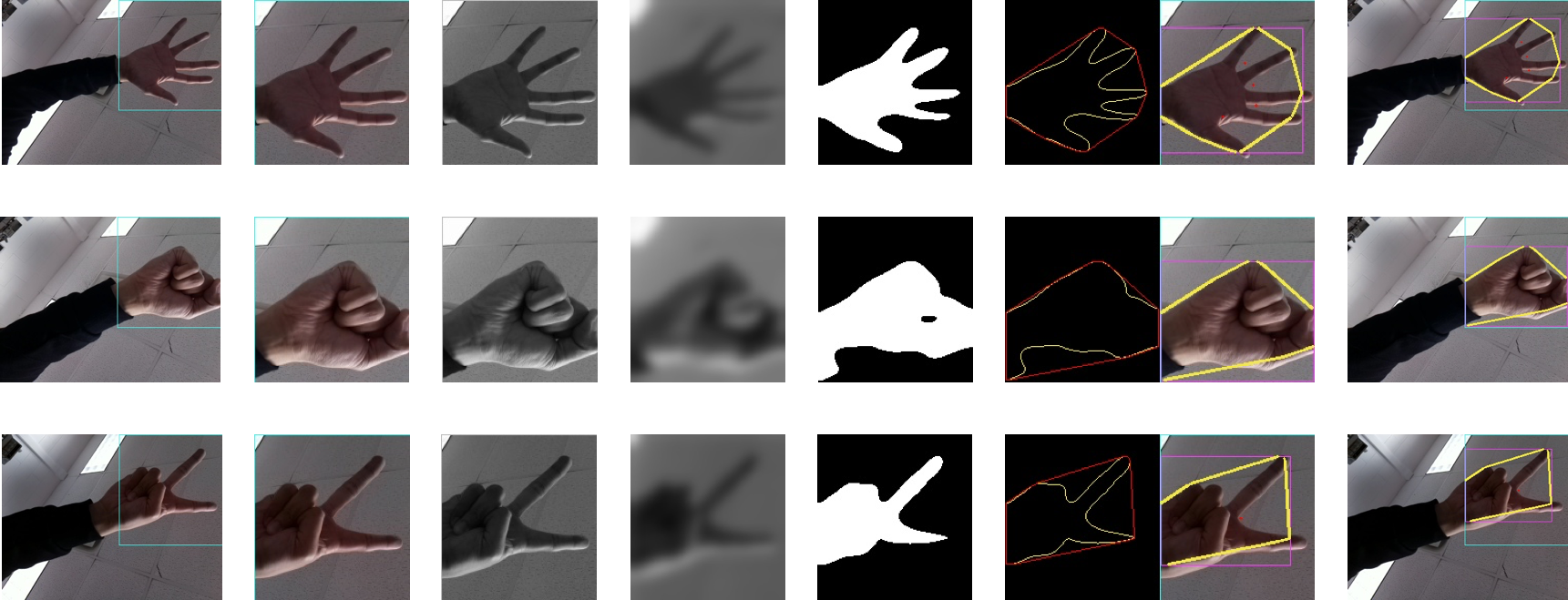

Gesture recognition belongs to the bored mode and was developed with the OpenCV library. The main idea is to capture an image and count the fingers in it to decide which gesture is shown. The weakness of this idea is that the user has to spread all five fingers apart to show paper.

We captured images with the PiCamera library. To capture frames more frequently, we used PiCamera’s video mode and stored the current frame in an array, on which the recognition runs. The array is first pre-processed by cropping and Gaussian blurring. Cropping restricts recognition to a small region of the image, and Gaussian blurring removes noise to improve accuracy.

We then found the largest foreground region and extracted its contour with OpenCV. This step requires a clean background, and a completely white background gives the most precise result. Under this condition, the foreground is the user’s hand and the largest region must belong to the hand. Using the hand contour, we separated the foreground (the user’s hand) and the background into a black-and-white image. Instead of counting fingers directly, we count the concavities of the hand. In practice, we count the convex regions of the background, which equal the concavities of the hand gesture, to decide which gesture is shown. Scissor, paper and rock should have one, four and zero concavities respectively. The hand gesture recognition results are shown in the figure below.

Hand Gesture Recognition
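The counting step can be illustrated without a camera. The sketch below mirrors the logic in the Demo.py listing in the code appendix: each convexity defect is a triangle (start, end, far), the angle at the far point is obtained from the law of cosines, and only defects with an angle of at most 90 degrees are counted. The function names are hypothetical, and the mapping in `classify` follows the appendix (zero defects for rock, one or two for scissor, anything more for paper) rather than the ideal counts stated in the text.

```python
import math


def defect_angle(start, end, far):
    """Angle in degrees at `far` in the triangle (start, end, far)."""
    a = math.hypot(end[0] - start[0], end[1] - start[1])
    b = math.hypot(far[0] - start[0], far[1] - start[1])
    c = math.hypot(end[0] - far[0], end[1] - far[1])
    cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def count_defects(triangles, max_angle=90.0):
    """Count the defects whose angle is narrow enough to be a gap between fingers."""
    return sum(1 for s, e, f in triangles if defect_angle(s, e, f) <= max_angle)


def classify(n_defects):
    """Map a defect count to a gesture name."""
    if n_defects == 0:
        return "Rock"
    if n_defects in (1, 2):
        return "Scissor"
    return "Paper"
```

In the real pipeline the triangles would come from OpenCV's convexity defects of the hand contour; here they are plain coordinate tuples so the logic can be checked in isolation.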

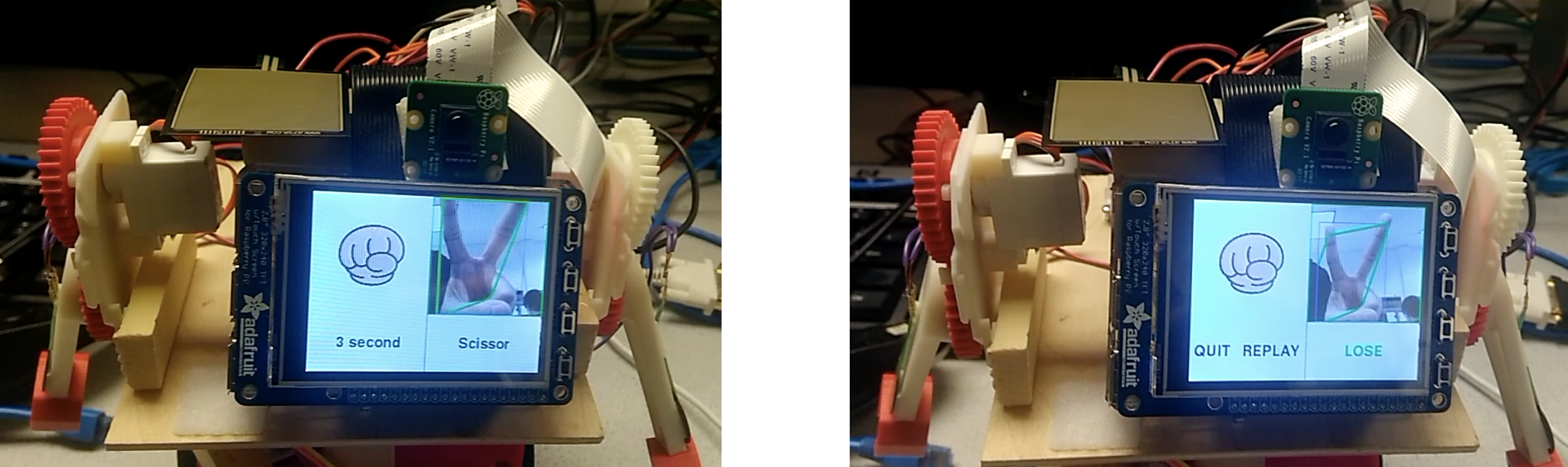

With the hand gesture recognition system in place, we designed the game interface and actions with PyGame. The small region captured by the PiCamera is shown at the top right of the screen. To make the interface user-friendly, the recognition result is displayed below the camera image, so users can adjust their hand until the robot recognizes the gesture correctly. The robot’s choice is shown in the top left corner and keeps changing until time is up for the round. A countdown timer is shown at the bottom left. In each round, users have 5 seconds to settle on their choice. When time is up, the robot judges the result and displays a reaction.

Game Interface
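The judging step at the end of a round can be written compactly. This sketch uses the same gesture ordering as the Demo.py listing (`["Rock", "Scissor", "Paper"]`) and returns the result from the user's point of view; the function name `judge` is hypothetical.

```python
GESTURES = ["Rock", "Scissor", "Paper"]


def judge(robot, user):
    """Return 'WIN', 'LOSE' or 'TIE' from the user's point of view.

    robot and user are indices into GESTURES.
    """
    if robot == user:
        return "TIE"
    # With this ordering, each gesture beats the one that follows it cyclically:
    # Rock beats Scissor, Scissor beats Paper, Paper beats Rock.
    if (user + 1) % 3 == robot:
        return "WIN"
    return "LOSE"
```

For example, `judge(GESTURES.index("Rock"), GESTURES.index("Paper"))` evaluates the case where the robot shows rock and the user shows paper.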

The reactions consist of face animation and movement. As described above, the face animation alternates between two consecutive images. For movement, we used four servos to control the robot’s arms and wheels. The wheel servos are continuous rotation servos, which are driven by PWM signals of different frequencies. We wrote several functions to make a wheel servo rotate clockwise, rotate counter-clockwise or stop. For the arm servos, the PWM frequency sets the rotation angle, so we wrote functions to move an arm to a given position. With these servo functions, we implemented different reactions for the three results: users win, users lose and users tie.

- users win: the robot shakes its arms through a small angle and displays a crying face animation

- users lose: the robot runs in a circle while swinging its arms and displays a very happy face animation

- users tie: the robot moves forward and backward several times while swinging its arms and displays a frowning face animation
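The servo functions behind these reactions rely on turning a pulse width into PWM settings. The sketch below restates the `cal_freq` and `cal_dc` helpers from the code appendix in one function, assuming a high pulse of `pulse_ms` milliseconds followed by a fixed 20 ms low period; the name `pwm_settings` is hypothetical.

```python
def pwm_settings(pulse_ms, gap_ms=20.0):
    """Return (frequency in Hz, duty cycle in percent) for one servo pulse width."""
    period_ms = pulse_ms + gap_ms            # one full PWM cycle
    frequency_hz = 1000.0 / period_ms        # cycles per second
    duty_cycle = pulse_ms / period_ms * 100  # share of the cycle spent high
    return frequency_hz, duty_cycle


if __name__ == "__main__":
    # Pulse widths used in the appendix: 1.3 ms and 1.7 ms for the wheel servos,
    # 1.0 ms to 2.0 ms for the arm servos.
    for pulse in (1.0, 1.3, 1.5, 1.7, 2.0):
        freq, dc = pwm_settings(pulse)
        print("%.1f ms -> %.2f Hz, %.2f %%" % (pulse, freq, dc))
```

Because the low period is fixed, a longer pulse gives both a lower frequency and a higher duty cycle, which is why the text describes the servos as being controlled by frequency.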

After the action, the robot’s choice and the user’s choice are shown at the top left and top right of the screen. The game result from the user’s viewpoint is shown in the bottom right corner. Two buttons, “quit” and “replay”, are at the bottom left of the piTFT. If the user chooses replay, the game restarts for a new round. The quit button ends the bored mode.

Music Player

When the robot enters the happy mode, we want it to become a music player. The player currently includes six songs, all of which are cheerful and refreshing. Three of them are from Disney movies, namely Frozen, Tangled and Moana. The others are from uplifting movies, namely High School Musical, Hannah Montana and La La Land. We want the user to be influenced by the happy atmosphere when listening to this music. A music player needs at least the following functions: next song, previous song, pause/restart and quit. The piTFT screen displays the happy expression while the music plays.

For the hardware part, we use the force-sensitive resistors: the square one on top of the head and the round ones attached to the arms. We did not use the buttons next to the piTFT screen because we did not have enough GPIOs: GPIO 22, 23 and 27, which would normally serve those buttons, control other components. Touching the robot’s left arm moves to the next song, and touching its right arm moves to the previous song. Touching its head pauses the music, and touching its head again resumes it. Touching both arms at the same time quits the music player and, with it, the happy mode.

For the software part, the music player was developed with PyGame. The functions listed above can all be built on PyGame’s music module (pygame.mixer.music). What remains is to poll the sensors continuously, so that when users touch one or more sensors the music player reacts quickly and accurately. This is part of the human-robot interaction that we intended to include from the very beginning of the project. Any delay degrades the user experience to some degree, so we tried to eliminate it as far as possible.
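The control logic of the music player can be separated from audio playback. The sketch below keeps only the playlist state and the touch handling described above; the class and function names are hypothetical, the file names are the ones listed in the code appendix, and actual playback (for example through pygame.mixer.music) is deliberately left out.

```python
class Playlist:
    """Track the current song and pause state without playing any audio."""

    def __init__(self, songs):
        self.songs = list(songs)
        self.index = 0
        self.paused = False

    def next_song(self):
        self.index = (self.index + 1) % len(self.songs)  # wrap around at the end
        self.paused = False
        return self.songs[self.index]

    def previous_song(self):
        self.index = (self.index - 1) % len(self.songs)  # wrap around at the start
        self.paused = False
        return self.songs[self.index]

    def toggle_pause(self):
        self.paused = not self.paused
        return self.paused


def handle_touch(playlist, head, left, right):
    """Apply one touch event and return the action taken."""
    if left and right:
        return "quit"          # both arms: leave the player and the happy mode
    if left:
        playlist.next_song()
        return "next"
    if right:
        playlist.previous_song()
        return "previous"
    if head:
        return "pause" if playlist.toggle_pause() else "resume"
    return "none"
```

Checking the two-arm case first matters: otherwise a simultaneous press would be read as "next song" before the quit gesture is noticed.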

Obstacle Avoidance

We designed four emotions: happy, sad, angry and bored. We also needed a mode to fill the gaps between them, because it makes no sense for the robot to enter another emotion immediately after finishing one. For this purpose, we designed the wander mode, during which the humanoid robot wanders randomly on the ground. For this mode to work properly, the robot must avoid barriers and obstacles to stay safe. Achieving this required work on both hardware and software.

For the hardware, we needed a distance sensor. We chose the ultrasonic distance sensor because it was available in the lab and is easy to understand and use. The sensor relies on a simple physical principle: distance equals speed times time. It emits an ultrasonic pulse and times the echo, so the distance to the obstacle is the speed of sound multiplied by half the round-trip time. Since our robot is not primarily designed to circumvent obstacles and barriers, we installed only one ultrasonic distance sensor, at the front.
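As a worked example of the principle, the conversion from echo time to distance takes one line. This is a sketch assuming a speed of sound of roughly 343 m/s in room-temperature air; the function name is hypothetical, and reading the echo pulse from the sensor pins is not shown.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # approximate value for air at about 20 degrees Celsius


def echo_to_distance_cm(echo_seconds):
    """Convert a round-trip echo time into a one-way distance in centimetres."""
    # The pulse travels to the obstacle and back, so only half the time counts.
    return SPEED_OF_SOUND_CM_PER_S * echo_seconds / 2.0
```

With these numbers, an echo of 2 ms corresponds to an obstacle about 34 cm away, close to the 14-inch threshold used by the wander algorithm.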

For the software, we designed our algorithm and tested it within an area enclosed by wood. The algorithm works as follows. The robot can move in four directions: forward, backward, left and right. Each time before it moves, it measures the distance between its front and the nearest obstacle ahead. If the distance is larger than 14 inches, there is enough room, so the robot moves forward. If the distance is less than 6 inches, there is not enough room to either move forward or turn, so the robot moves backward. When the distance is between 6 and 14 inches, it turns left or right. To decide which way to turn, we record the distance from the previous cycle: if the distance in the current cycle is larger than in the previous cycle, the robot is turning in the right direction; otherwise, it switches to the opposite direction. It stops turning once the distance is larger than 14 inches again. This works in most cases. For some extreme angles or very restricted spaces, however, it is not robust enough, since the sides and rear of the robot may run into obstacles. This can be solved by adding more ultrasonic distance sensors at the sides and rear of the robot.

Wander Mode
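The decision rule of the wander mode can be sketched as a pure function of the current and previous distance readings. This is an illustration of the rule as described in the text, not the project's PET_WANDER code (which is not reproduced here); the names `next_move`, `FAR_INCHES` and `NEAR_INCHES` are hypothetical, and the handling of readings that fall exactly on a threshold is an assumption.

```python
FAR_INCHES = 14.0   # above this there is room to move forward
NEAR_INCHES = 6.0   # below this there is no room to turn


def next_move(distance, previous_distance, turn_direction):
    """Return (move, turn_direction) for one control cycle.

    distance and previous_distance are in inches; previous_distance may be
    None on the first cycle. turn_direction is 'left' or 'right' and is
    remembered between cycles.
    """
    if distance > FAR_INCHES:
        return "forward", turn_direction
    if distance < NEAR_INCHES:
        return "backward", turn_direction
    # Between the two thresholds: keep turning the same way only if the
    # obstacle is getting farther away, otherwise switch direction.
    if previous_distance is not None and distance <= previous_distance:
        turn_direction = "right" if turn_direction == "left" else "left"
    return turn_direction, turn_direction
```

The caller would feed the returned `turn_direction` back in on the next cycle, together with the reading that has just been used as `previous_distance`.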

Testing

Hand Gesture Recognition

The idea of hand gesture recognition is to detect a hand against a clean background. Our first approach was to capture the background, then capture the user’s hand gesture against the same background, and subtract the background image from the second image to isolate the hand. We rejected this approach for two reasons. First, when we tried it, the subtraction result was not as good as we expected, because in some situations the hand was incomplete in the result. Second, it might lead to a worse game experience. As a result, we chose the current approach to hand gesture recognition.

At first we used PiCamera’s capture function to obtain each frame for gesture recognition. The problem was that in each game round users have 5 seconds to adjust their hand gesture, and with the capture function the captured frames were not smooth, which produced a poor user experience. To fix this, we switched to PiCamera’s continuous capture function to obtain the frame buffer for gesture recognition, which yields smooth frame capture.

Pressure Sensor

We used three pressure sensors to control the robot. To convert the analog signal from a pressure sensor into a digital signal, we used an MCP3008 chip for A/D conversion. At first, we referred to some demos on using a pressure sensor with the MCP3008, but they required a lot of code. Then we read several descriptions of the MCP3008 and found an MCP3008 library from Adafruit, so we wrote the pressure sensor program with that library.
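Once the MCP3008 delivers a 10-bit reading (0 to 1023) per channel, deciding whether a sensor is touched is a threshold test. The sketch below uses the window found in the PET_ASBH.py listing (at least 150 and below 700) and the channel numbers 0, 4 and 7 used there; which channel belongs to which sensor is an assumption, and the function names are hypothetical.

```python
PRESS_MIN = 150   # readings below this are treated as "not touched"
PRESS_MAX = 700   # readings at or above this are ignored as well

# Assumed wiring: the appendix reads channels 0, 4 and 7, but does not say
# which sensor sits on which channel.
LEFT_ARM_CH, RIGHT_ARM_CH, HEAD_CH = 0, 4, 7


def is_pressed(adc_value):
    """Return True if a raw ADC reading falls inside the touch window."""
    return PRESS_MIN <= adc_value < PRESS_MAX


def read_touches(read_adc):
    """Read all three sensors through `read_adc(channel)` and return booleans."""
    return {
        "left": is_pressed(read_adc(LEFT_ARM_CH)),
        "right": is_pressed(read_adc(RIGHT_ARM_CH)),
        "head": is_pressed(read_adc(HEAD_CH)),
    }
```

On the robot, `read_adc` would be the `mcp.read_adc` method of the Adafruit MCP3008 object created in the appendix; passing it in as a parameter lets the logic be tested with a fake reader.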

Distance Sensor

The distance sensor was designed for wander mode. We first measured the relationship between the wheel servos’ running time and the distance travelled, so that we could control movement distance by setting the running time. We then decided that the robot should not move forward when the distance is under 35 cm. In some situations, the robot was placed very close to an obstacle but continued to move forward. We tested the distance sensor and found that when the obstacle was very close to the sensor, the detected distance was not near 0 but about 14 yards. Therefore, we revised the program so that any detected distance over 14 yards is treated as 0.
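The fix for the close-range glitch can be expressed as a small filter applied to every reading. This is a sketch assuming the readings are in centimetres; the function and constant names are hypothetical.

```python
CM_PER_YARD = 91.44
GLITCH_THRESHOLD_CM = 14 * CM_PER_YARD  # readings above this are not plausible here


def sanitize_distance_cm(raw_cm):
    """Treat implausibly large readings as 'obstacle touching the sensor'."""
    if raw_cm > GLITCH_THRESHOLD_CM:
        return 0.0
    return raw_cm
```

Mapping the glitch to 0 makes the wander algorithm back away, which is the safe reaction when the obstacle is too close to be measured.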

Result

Demonstration Video

Generally, the performance of our entertaining robot meets our expectations. All five modes work satisfactorily. For each mode, an animation of two faces with similar expressions is shown on the piTFT. There is also a spoken message to indicate which mode the robot is in. For the bored mode, the message is ‘I have nothing to do, can you play a game with me?’. For the angry mode, the message is ‘I’m not in a good mood. I am about to explode. I need a hug.’ For the happy mode, the message is ‘I’m very happy right now, I can sing a song for you.’ For the sad mode, the message is ‘I just failed my prelim. I am so upset.’

When the robot enters the bored mode, it asks whether you want to play a game with it. You can agree by pressing the sensors on both arms, or refuse by doing nothing. Our gesture recognition algorithm works well with white backgrounds. When you win and it loses, it shows a sad face animation and shakes both arms just like a child. When you lose and it wins, it shows off by shaking both arms and spinning, with a happy face animation displayed on the piTFT. When there is a tie, it shows a frowning face and moves back and forth. You can also choose to replay or to quit through the interface. If you choose to quit, it automatically moves on to the next mode.

The angry mode is relatively simple. After the robot speaks its message, you can touch both of its arm sensors to give it a hug, and the mode ends.

When the robot enters the happy mode, you can touch both of its arm sensors to turn it into a music player. Press the right arm sensor to move to the previous song. Press the left arm sensor to move to the next song. Press the head sensor to pause the music, and press it again to resume. Press both arm sensors again to quit the happy mode.

The sad mode is another simple mode. After the robot speaks its message, you can touch its head, just like petting a cat or dog, to cheer it up. The mode ends after you do so.

Between any two of the four emotions comes the wander mode, in which the robot wanders automatically without colliding with barriers and obstacles such as walls.

Overall, all of our components, both hardware and software, work as we expected.

Conclusion

To sum up, our main components work really well. More specifically, our core functions, including gesture recognition, music player, barrier and obstacle avoidance, PyGame animation, GUI/interface and text-to-speech (TTS), interact with each other very well in our entertaining humanoid robot.

However, modifications and improvements can be made to enhance our robot. For the hardware, we can replace the wood support structures with laser-cut counterparts. We can also rearrange the hardware components so that the robot looks more compact, and improve the design so that it moves more stably. For the software, we can make our gesture recognition algorithm more robust, and we can add more distance sensors to make our obstacle avoidance algorithm more accurate.

Future Work

Hardware

First, we may reassemble our robot to make it more compact and balanced. The charger and the speaker were attached to the robot on the last day, so the robot seemed a little unbalanced during our demo. Second, laser-cut support structures can be used instead of the wood ones. Third, we may add two or three more ultrasonic distance sensors to improve our obstacle avoidance algorithm. It is currently somewhat limited, since we installed only one ultrasonic distance sensor at the front, so the sides and the rear of the robot may still collide with obstacles and barriers. Fourth, we want to add more servos to give the arms another degree of freedom.

Software

First, our gesture recognition algorithm can be made more robust. We can modify it so that it distinguishes gestures not only against a white background but also against noisy backgrounds. Second, the text-to-speech (TTS) function can be improved. We spent several days trying to find a voice that resembles a child, but made no progress, so we used the adult male voice with adjusted pitch and speed. With more time, we could explore more TTS libraries and might find a more suitable voice. Third, a speech-to-text (STT) function can be added. We did not think of this function at first, but as the project progressed we found that it might be helpful. With the limited time, we were not able to implement it properly, and we would like to add it if we have more time. With both TTS and STT, we could design further human-robot interaction. Fourth, more reactions can be added to our robot. Its current actions are rather simple because of its structure, and we can make them more complex.

Work Distribution

Project group picture

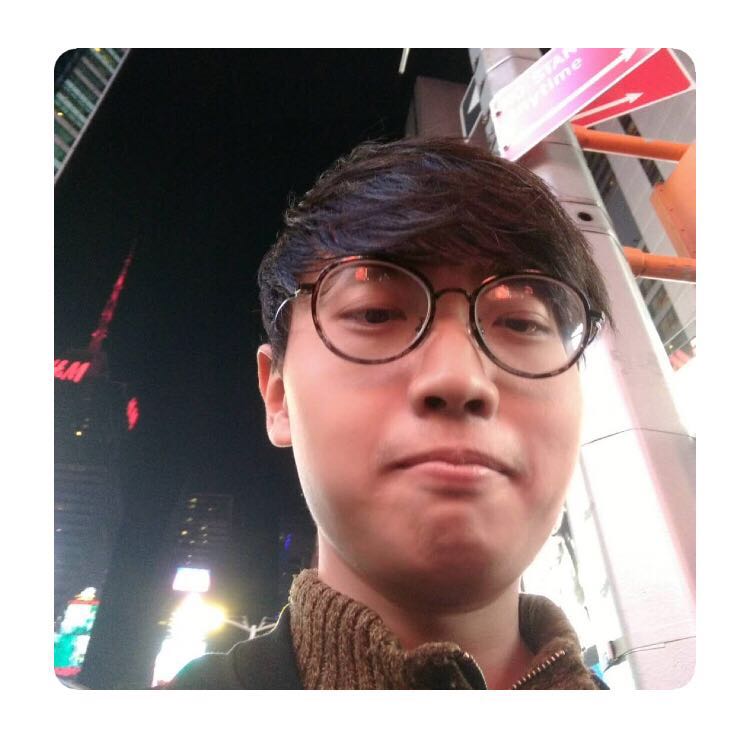

Xinyu Wang

xw474@cornell.edu

Developed algorithm and software program. Helped with the hardware system.

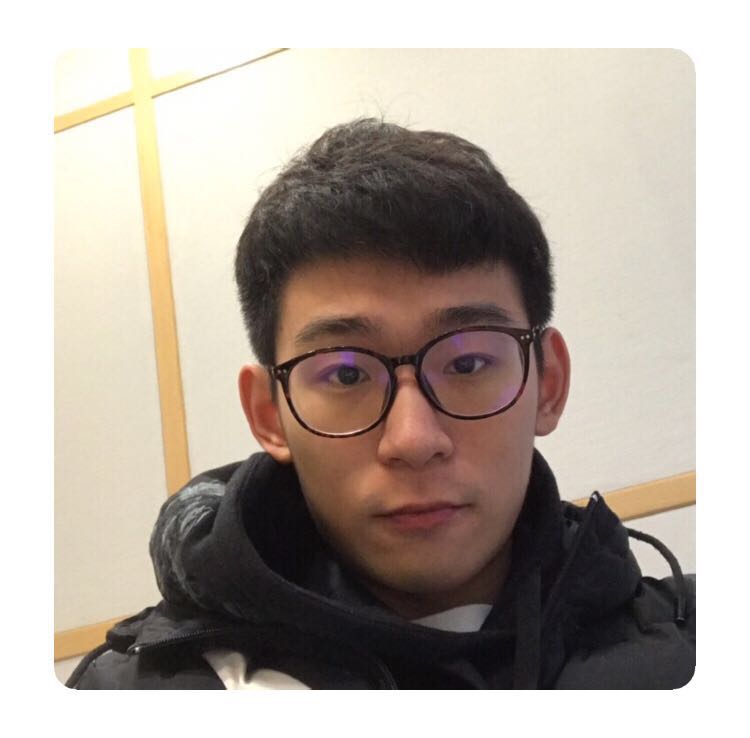

Mingyang Yang

my464@cornell.edu

Designed and built the hardware system. Helped with algorithm and software program.

Parts List

- Square Force-Sensitive Resistor * 1 = $7.95

- Round Force-Sensitive Resistor * 2 = $14.00

- HobbyKing 15178 Analog Servo * 2 = $12.80

- Raspberry Pi Pi-Camera * 1 available in lab

- Wheels and Wheel Servos * 2 available in lab

- Portable Charger * 1 available in lab

- Speaker * 1 available in lab

- A/D converter MCP3008 * 1 available in lab

- AAA Batteries * 4 available in lab

- Bread Boards * 2 and Circuit Components available in lab

Total: $34.75

References

PiCamera Documentation

Pygame Documentation

Arm Servo Documentation

Ultrasonic Distance Sensor Wiring and Usage

Force Sensitive Resistor Wiring and Usage

A/D Converter Usage

Gesture Recognition Algorithm

Code Appendix

Demo.py

from two_wheel import *
from GAME_ACTION import *
from PET_ASBH import *
from PET_WANDER import *
import random
import os
import subprocess
import pygame
import cv2
import numpy as np
import math
import time
from picamera import PiCamera
from picamera.array import PiRGBArray
import RPi.GPIO as GPIO

GPIO.setmode(GPIO.BCM)
GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP)

# Physical quit button on GPIO 17: release the servos and exit.
def GPIO17_callback(channel):
    clean()
    exit()

GPIO.add_event_detect(17, GPIO.FALLING, callback=GPIO17_callback, bouncetime=300)

# Route pygame output and touch input to the piTFT.
os.putenv('SDL_VIDEODRIVER', 'fbcon')
os.putenv('SDL_FBDEV', '/dev/fb1')
os.putenv('SDL_MOUSEDRV', 'TSLIB')
os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')

# piTFT
screen = pygame.display.set_mode((320, 240))
pygame.mouse.set_visible(False)
black = 0,0,0
pygame.init()
my_font = pygame.font.Font(None, 30)
WHITE = 255,255,255
gesture = ["Rock", "Scissor", "Paper"]

# PC choice images
pc_paper = pygame.image.load("/home/pi/project/picture/paper.png")
pc_paper = pygame.transform.scale(pc_paper, (100, 100))
pc_paper_rect = pc_paper.get_rect(center=(80, 80))
pc_rock = pygame.image.load("/home/pi/project/picture/rock.png")
pc_rock = pygame.transform.scale(pc_rock, (100, 100))
pc_rock_rect = pc_rock.get_rect(center=(80, 80))
pc_scissor = pygame.image.load("/home/pi/project/picture/scissor.png")
pc_scissor = pygame.transform.scale(pc_scissor, (100, 100))
pc_scissor_rect = pc_scissor.get_rect(center=(80, 80))

# Game buttons
quit_game = "QUIT"
quit_game_surface = my_font.render(quit_game, True, black)
quit_game_rect = quit_game_surface.get_rect(center=(30, 200))
replay_game = "REPLAY"
replay_game_surface = my_font.render(replay_game, True, black)
replay_game_rect = replay_game_surface.get_rect(center=(110, 200))

# Camera
camera = PiCamera()
camera.resolution = (320, 240)
camera.framerate = 24
camera.rotation = 180
time.sleep(2)
video = PiRGBArray(camera)

#pc_choice = 0
#start_time = time.time()
init_servo()
Q_TWO = False

# Pet modes
statues = ["BORED", "ANGRY", "WANDER", "HAPPY", "SAD"]
walk_time = time.time()
take_game = False
demo_count = 0
demo = [0, 1, 3, 4, 2]
#demo = [2]

while True:
    screen.fill(black)
    #statue = int(random.random() * 10) % 5
    statue = demo[demo_count]
    demo_count = (demo_count + 1) % len(demo)
    #if(time.time() - walk_time < 2 or take_game):
    #    statue = 2
    #    take_game = False
    time.sleep(3)
    print(statues[statue])
    if(statue == 1):
        pet_angry(screen, 15)
    elif(statue == 2):
        pet_wander(screen, 60)
    elif(statue == 3):
        pet_happy(screen, 15)
    elif(statue == 4):
        pet_sad(screen, 15)
    elif(statue == 0):
        Q_TWO = pet_bored(screen, 15)
    if(statue != 2):
        walk_time = time.time()

    # One pass of this loop is one round of the game.
    while Q_TWO:
        take_game = True
        pc_choice = 0
        start_time = time.time()
        for frameBuf in camera.capture_continuous(video, format='rgb', use_video_port=True):
            k = cv2.waitKey(10)
            if k == 27:
                print("hh")
                break
            image = np.rot90(frameBuf.array)
            video.truncate(0)
            img = video.array

            # Crop the detection area, then blur and threshold it.
            cv2.rectangle(img, (0, 160), (160, 0), (0,255,0),1)
            crop_img = img[0:160, 0:150]
            grey = cv2.cvtColor(crop_img, cv2.COLOR_BGR2GRAY)
            value = (35, 35)
            blurred = cv2.GaussianBlur(grey, value, 0)
            _, thresh1 = cv2.threshold(blurred, 127, 255,
                                       cv2.THRESH_BINARY_INV+cv2.THRESH_OTSU)

            # The largest contour is taken to be the hand.
            contours, hierarchy = cv2.findContours(thresh1.copy(), cv2.RETR_TREE,
                                                   cv2.CHAIN_APPROX_NONE)
            cnt = max(contours, key = lambda x: cv2.contourArea(x))
            x, y, w, h = cv2.boundingRect(cnt)
            cv2.rectangle(crop_img, (x, y), (x+w, y+h), (0, 0, 255), 0)
            hull = cv2.convexHull(cnt)
            drawing = np.zeros(crop_img.shape,np.uint8)
            cv2.drawContours(drawing, [cnt], 0, (0, 255, 0), 0)
            cv2.drawContours(drawing, [hull], 0,(0, 0, 255), 0)
            hull = cv2.convexHull(cnt, returnPoints=False)
            defects = cv2.convexityDefects(cnt, hull)
            count_defects = 0
            cv2.drawContours(thresh1, contours, -1, (0, 255, 0), 3)

            # Count the convexity defects whose angle is at most 90 degrees.
            for i in range(defects.shape[0]):
                s,e,f,d = defects[i,0]
                start = tuple(cnt[s][0])
                end = tuple(cnt[e][0])
                far = tuple(cnt[f][0])
                a = math.sqrt((end[0] - start[0])**2 + (end[1] - start[1])**2)
                b = math.sqrt((far[0] - start[0])**2 + (far[1] - start[1])**2)
                c = math.sqrt((end[0] - far[0])**2 + (end[1] - far[1])**2)
                # 57 approximates 180/pi (radians to degrees)
                angle = math.acos((b**2 + c**2 - a**2)/(2*b*c)) * 57
                if angle <= 90:
                    count_defects += 1
                    cv2.circle(crop_img, far, 1, [0,0,255], -1)
                cv2.line(crop_img,start, end, [0,255,0], 2)

            all_img = np.hstack((drawing, crop_img))
            img = np.rot90(img)
            #img = np.flip(img, 0)
            img_final = pygame.surfarray.make_surface(img)
            screen.fill(black)
            screen.blit(img_final, (0,0))
            choice_bg = pygame.Rect((159, 161), (160, 80))
            pygame.draw.rect(screen, WHITE, choice_bg)
            pc_choice_bg = pygame.Rect((0, 0), (158, 240))
            pygame.draw.rect(screen, WHITE, pc_choice_bg)

            # Map the defect count to a gesture index.
            choose = 0
            if(count_defects == 0):
                choose = 0
            elif(count_defects == 1 or count_defects == 2):
                choose = 1
            else:
                choose = 2
            choice = gesture[choose]
            choice_surface = my_font.render(choice, True, black)
            choice_rect = choice_surface.get_rect(center=(240,200))
            screen.blit(choice_surface, choice_rect)

            # The robot's choice cycles every frame until time is up.
            if(pc_choice == 0):
                screen.blit(pc_rock, pc_rock_rect)
            elif(pc_choice == 1):
                screen.blit(pc_scissor, pc_scissor_rect)
            else:
                screen.blit(pc_paper, pc_paper_rect)
            pc_choice = (pc_choice + 1) % 3

            cur_time = 5 - int(time.time() - start_time)
            if(cur_time >= 0):
                cur_time_text = str(cur_time) + " second"
            else:
                cur_time_text = "0 second"
            time_surface = my_font.render(cur_time_text, True, black)
            time_rect = time_surface.get_rect(center=(80, 200))
            screen.blit(time_surface, time_rect)
            pygame.display.flip()
            if(cur_time == -1):
                break
            #pygame.display.update()
            #print('Number of Fingers:', count_defects+1)

        # Judge the round from the user's point of view: 0 win, 1 tie, 2 lose.
        result = 1
        if((pc_choice == 0 and choose == 2) or (pc_choice == 1 and choose == 0) or (pc_choice == 2 and choose == 1)):
            result = 0
        elif(pc_choice != choose):
            result = 2
        result_text = ""
        if(result == 0):
            result_text = "WIN"
            result_text_surface = my_font.render(result_text, True, (255, 0, 0))
        elif(result == 1):
            result_text = "TIE"
            result_text_surface = my_font.render(result_text, True, (0, 0, 255))
        else:
            result_text = "LOSE"
            result_text_surface = my_font.render(result_text, True, (0, 255, 0))
        game_action(screen, result_text)

        # Show the result until the user taps QUIT or REPLAY.
        Q_GAME = True
        while Q_GAME:
            for event in pygame.event.get():
                x = 0
                y = 0
                # Compare event types with ==, not "is".
                if(event.type == pygame.MOUSEBUTTONDOWN):
                    pos = pygame.mouse.get_pos()
                    x,y = pos
                elif(event.type == pygame.MOUSEBUTTONUP):
                    pos = pygame.mouse.get_pos()
                    x,y = pos
                    if(y > 140 and y < 220):
                        if(x > 0 and x < 80):
                            Q_TWO = False
                            Q_GAME = False
                    if(y > 140 and y < 220 and x > 80 and x < 160):
                        Q_GAME = False
            screen.fill(black)
            screen.blit(img_final, (0, 0))
            pygame.draw.rect(screen, WHITE, choice_bg)
            pygame.draw.rect(screen, WHITE, pc_choice_bg)
            if(pc_choice == 0):
                screen.blit(pc_rock, pc_rock_rect)
            elif(pc_choice == 1):
                screen.blit(pc_scissor, pc_scissor_rect)
            else:
                screen.blit(pc_paper, pc_paper_rect)
            result_text_rect = result_text_surface.get_rect(center=(240, 200))
            screen.blit(result_text_surface, result_text_rect)
            screen.blit(quit_game_surface, quit_game_rect)
            screen.blit(replay_game_surface, replay_game_rect)
            pygame.display.flip()
            time.sleep(0.2)

clean()

two_wheel.py

import time
import RPi.GPIO as gpio

# t is the high pulse width in ms; each pulse is followed by 20 ms low.
def cal_freq(t):
    return 1.0 / (t + 20) * 1000

def cal_dc(t):
    return t / (t + 20) * 100

gpio.setmode(gpio.BCM)
gpio.setup(19, gpio.OUT)
gpio.setup(26, gpio.OUT)
p_left = gpio.PWM(26, 1)
p_right = gpio.PWM(19, 1)

def init_servo():
    p_left.start(0)
    p_right.start(0)

# Pulse widths (ms) for full speed in each direction.
clock_max = 1.3
counter_clock_max = 1.7

def two_wheel(n, d):
    if(n == "Left_servo" and d == "clockwise"):
        p_left.ChangeFrequency(cal_freq(clock_max))
        p_left.ChangeDutyCycle(cal_dc(clock_max))
    elif(n == "Left_servo" and d == "counter_clockwise"):
        p_left.ChangeFrequency(cal_freq(counter_clock_max))
        p_left.ChangeDutyCycle(cal_dc(counter_clock_max))
    elif(n == "Right_servo" and d == "clockwise"):
        p_right.ChangeFrequency(cal_freq(clock_max))
        p_right.ChangeDutyCycle(cal_dc(clock_max))
    elif(n == "Right_servo" and d == "counter_clockwise"):
        p_right.ChangeFrequency(cal_freq(counter_clock_max))
        p_right.ChangeDutyCycle(cal_dc(counter_clock_max))
    elif(n == "Left_servo" and d == "stop"):
        p_left.ChangeFrequency(1)
        p_left.ChangeDutyCycle(0)
    elif(n == "Right_servo" and d == "stop"):
        p_right.ChangeFrequency(1)
        p_right.ChangeDutyCycle(0)
    #print "Servo number: " + n + ", direction: " + d + "."

def clean():
    p_left.stop()
    p_right.stop()
    gpio.cleanup()

test_servo.py

import time
import RPi.GPIO as GPIO

# t is the high pulse width in ms; each pulse is followed by 20 ms low.
def cal_freq(t):
    return 1.0 / (t + 20) * 1000

def cal_dc(t):
    return t / (t + 20) * 100

def change(servo, t):
    servo.ChangeFrequency(cal_freq(t))
    servo.ChangeDutyCycle(cal_dc(t))

GPIO.setmode(GPIO.BCM)
GPIO.setup(6, GPIO.OUT)
GPIO.setup(5, GPIO.OUT)
servo_left = GPIO.PWM(6, 1)
servo_right = GPIO.PWM(5, 1)

def init_arms():
    servo_left.start(0)
    servo_right.start(0)

# Arm positions: 1.0 ms forward, 1.5 ms middle, 2.0 ms back.
def forw(one_servo):
    if(one_servo == "Left"):
        change(servo_left, 1.0)
    else:
        change(servo_right, 1.0)

def swing(one_servo, fr):
    if(one_servo == "Left"):
        change(servo_left, fr)
    else:
        change(servo_right, fr)

def back(one_servo):
    if(one_servo == "Right"):
        change(servo_right, 2.0)
    else:
        change(servo_left, 2.0)

def mid(one_servo):
    if(one_servo == "Right"):
        change(servo_right, 1.5)
    else:
        change(servo_left, 1.5)

def stop_arms():
    servo_left.ChangeFrequency(1)
    servo_left.ChangeDutyCycle(0)
    servo_right.ChangeFrequency(1)
    servo_right.ChangeDutyCycle(0)

def clean_arms():
    servo_left.stop()
    servo_right.stop()
    GPIO.cleanup()

GAME_ACTION.py

from two_wheel import *
from test_servo import *
import time
import pygame

def game_action(screen, result):
    tt1 = 5
    tt2 = 1
    tt3 = 0.4
    init_arms()

    # Two face images per result, shown alternately as an animation.
    win1 = pygame.image.load("/home/pi/project/picture/win1.jpeg")
    win1 = pygame.transform.scale(win1, (240, 240))
    win1_rect = win1.get_rect(center=(160, 120))
    win2 = pygame.image.load("/home/pi/project/picture/win2.jpeg")
    win2 = pygame.transform.scale(win2, (240, 240))
    win2_rect = win2.get_rect(center=(160, 120))
    lose1 = pygame.image.load("/home/pi/project/picture/lose1.jpeg")
    lose1 = pygame.transform.scale(lose1, (240, 240))
    lose1_rect = lose1.get_rect(center=(160, 120))
    lose2 = pygame.image.load("/home/pi/project/picture/lose2.jpeg")
    lose2 = pygame.transform.scale(lose2, (240, 240))
    lose2_rect = lose2.get_rect(center=(160, 120))
    tie1 = pygame.image.load("/home/pi/project/picture/tie1.jpeg")
    tie1 = pygame.transform.scale(tie1, (240, 240))
    tie1_rect = tie1.get_rect(center=(160, 120))
    tie2 = pygame.image.load("/home/pi/project/picture/tie2.jpeg")
    tie2 = pygame.transform.scale(tie2, (240, 240))
    tie2_rect = tie2.get_rect(center=(160, 120))

    screen.fill((0,0,0))
    start_time = time.time()

    if(result == "WIN"):
        # The user wins: the robot stays in place, shakes its arms and cries.
        two_wheel("Right_servo", "stop")
        two_wheel("Left_servo", "stop")
        sig = 0
        while(time.time() - start_time < tt1):
            if(sig == 0):
                screen.blit(lose1, lose1_rect)
                sig = 1
                swing("Left", 1.4)
                swing("Right", 1.6)
            else:
                screen.blit(lose2, lose2_rect)
                sig = 0
                swing("Left", 1.6)
                swing("Right", 1.4)
            pygame.display.flip()
            time.sleep(tt3)
    elif(result == "LOSE"):
        # The user loses: the robot spins and swings its arms happily.
        two_wheel("Right_servo", "counter_clockwise")
        two_wheel("Left_servo", "counter_clockwise")
        sig = 0
        while(time.time() - start_time < 3.8):
            if(sig == 0):
                screen.blit(win1, win1_rect)
                sig = 1
                forw("Left")
                forw("Right")
                time.sleep(0.3)
            else:
                screen.blit(win2, win2_rect)
                sig = 0
                back("Left")
                back("Right")
                time.sleep(0.4)
            pygame.display.flip()
    elif(result == "TIE"):
        # Tie: the robot moves forward and backward while swinging its arms.
        while(time.time() - start_time < tt1):
            two_wheel("Right_servo", "counter_clockwise")
            two_wheel("Left_servo", "clockwise")
            t1 = time.time()
            screen.blit(tie1, tie1_rect)
            pygame.display.flip()
            forw("Left")
            back("Right")
            while(time.time() - t1 < tt2):
                pass
            stop_arms()
            time.sleep(0.5)
            two_wheel("Right_servo", "clockwise")
            two_wheel("Left_servo", "counter_clockwise")
            t1 = time.time()
            screen.blit(tie2, tie2_rect)
            pygame.display.flip()
            forw("Right")
            back("Left")
            while(time.time() - t1 < 0.8):
                pass
            stop_arms()
            time.sleep(0.5)

    two_wheel("Left_servo", "stop")
    two_wheel("Right_servo", "stop")
    stop_arms()

PET_ASBH.py

import time
# Import SPI library (for hardware SPI) and MCP3008 library.
import Adafruit_GPIO.SPI as SPI
import Adafruit_MCP3008
import pygame
from test_servo import *
import os
import subprocess
import RPi.GPIO as GPIO

GPIO.setmode(GPIO.BCM)
GPIO.setup(21, GPIO.IN, pull_up_down=GPIO.PUD_UP)

def GPIO21_callback(channel):
    exit()

GPIO.add_event_detect(21, GPIO.FALLING, callback=GPIO21_callback, bouncetime=300)

# Software SPI configuration:
CLK = 13
MISO = 23
MOSI = 27
CS = 22
mcp = Adafruit_MCP3008.MCP3008(clk=CLK, cs=CS, miso=MISO, mosi=MOSI)

speak = "espeak -a 200 -g 3 -p 99 -s 155 "

def video_control():
    # MCP3008 channels of the force-sensitive resistors.
    ID1 = 0
    ID2 = 4
    ID3 = 7
    PLAY = True
    last_state = [False, False]
    while PLAY:
        values = [0] * 8
        values[ID1] = mcp.read_adc(ID1)
        values[ID2] = mcp.read_adc(ID2)
        values[ID3] = mcp.read_adc(ID3)
        print(values[ID1],"hhh", values[ID2])
        # Both sensors on channels 0 and 4 pressed: send "quit" to mplayer through the FIFO.
        if(values[ID1] < 700 and values[ID1] >= 150 and values[ID2] < 700 and values[ID2] >= 150 and last_state[0] == False):
            cmd = 'echo "quit" > /home/pi/project/video_fifo'
            subprocess.check_output(cmd, shell=True)
            PLAY = False
            last_state[0] = True
            last_state[1] = False

def video_play(ind):
    videolist = ["bigbuckbunny320p.mp4", "Frozen320.mp4"]
    index = ind
    play = "python /home/pi/project/whole/control.py & sudo SDL_VIDEODRIVER=fbcon SDL_FBDEV=/dev/fb1 mplayer -vo sdl -framedrop -input file=/home/pi/project/video_fifo "
    video = "/home/pi/project/videos/"
    os.system(play + video + videolist[index])

def music_play():
    musiclist = ["letitgo.mp3", "HM.mp3", "HSM.mp3", "Moana.mp3", "tangled.mp3", "lll.mp3"]
    path = "/home/pi/project/videos/"
    ID1 = 0
    ID2 = 4
    ID3 = 7
    index = 0
    sound_play(path + musiclist[index])
    sig = 0  # 0 = playing, 1 = paused
    QUIT = True  # the loop keeps running while this flag is True
    while QUIT:
        values = [0] * 8
        values[ID1] = mcp.read_adc(ID1)
        values[ID2] = mcp.read_adc(ID2)
        values[ID3] = mcp.read_adc(ID3)
        #print(values[ID1], "hhh", values[ID2])
        if(values[ID1] < 700 and values[ID1] >= 150 and values[ID2] < 700 and values[ID2] >= 150):
            # Channels 0 and 4 pressed together: stop and leave the player.
            sound_stop()
            QUIT = False
        elif(values[ID1] < 700 and values[ID1] >= 150):
            # Channel 0 only: next track, wrapping around at the end of the list.
            sound_stop()
            index = (index + 1) % len(musiclist)
            sound_play(path + musiclist[index])
            time.sleep(0.5)
        elif(values[ID2] < 700 and values[ID2] >= 150):
            # Channel 4 only: previous track, wrapping around at the start of the list.
            sound_stop()
            index = (index - 1 + len(musiclist)) % len(musiclist)
            sound_play(path + musiclist[index])
            time.sleep(0.5)
        elif(values[ID3] < 700 and values[ID3] >= 150 and sig == 0):
            # Channel 7 while playing: pause.
            sig = 1
            sound_pause()
            time.sleep(0.5)
        elif(values[ID3] < 700 and values[ID3] >= 150 and sig == 1):
            # Channel 7 while paused: resume.
            sig = 0
            sound_unpause()
            time.sleep(0.5)
        #print(index)

def sound_stop():
    pygame.mixer.music.stop()

def sound_pause():
    pygame.mixer.music.pause()

def sound_unpause():
    pygame.mixer.music.unpause()

def sound_play(path):
    pygame.mixer.init()
    clock = pygame.time.Clock()
    pygame.mixer.music.load(path)
    pygame.mixer.music.play()
    #while pygame.mixer.music.get_busy():
    #    clock.tick(1000)

def pet_happy(screen, duration):
    start_time = time.time()
    happy1 = pygame.image.load("/home/pi/project/picture/happy1.jpeg")
    happy1 = pygame.transform.scale(happy1, (240, 240))
    happy1_rect = happy1.get_rect(center=(160, 120))
    happy2 = pygame.image.load("/home/pi/project/picture/happy2.jpeg")
    happy2 = pygame.transform.scale(happy2, (240, 240))
    happy2_rect = happy2.get_rect(center=(160, 120))
    ID1 = 0
    ID2 = 4
    ID3 = 7
    sig = 0
    firstshow = True
    string = '"I am very happy. I can sing a song for you!"'
    #os.system(speak+string)
    while(time.time() - start_time < duration):
        values = [0] * 8
        values[ID1] = mcp.read_adc(ID1)
        values[ID2] = mcp.read_adc(ID2)
        values[ID3] = mcp.read_adc(ID3)
        if(sig == 0):
            screen.blit(happy1, happy1_rect)
            sig = 1
        else:
            screen.blit(happy2, happy2_rect)
            sig = 0
        pygame.display.flip()
        if (firstshow):
            os.system('echo "i am very happy i can sing a song for you" | festival --tts')
            firstshow = False
        time.sleep(0.2)
        if(values[ID1] < 1000 and values[ID1] >= 50 and values[ID2] < 1000 and values[ID2] >= 50):
            music_play()

def pet_bored(screen, duration):
    start_time = time.time()
    bored1 = pygame.image.load("/home/pi/project/picture/bored1.jpeg")
    bored1 = pygame.transform.scale(bored1, (240, 240))
    bored1_rect = bored1.get_rect(center=(160,120))
    bored2 = pygame.image.load("/home/pi/project/picture/bored2.jpeg")
    bored2 = pygame.transform.scale(bored2, (240, 240))
    bored2_rect = bored2.get_rect(center=(160,120))
    ID1 = 0
    ID2 = 4
    sig = 0
    firstshow = True
    start_time = time.time()
    while(time.time() - start_time < duration):
        values = [0] * 8
        values[ID1] = mcp.read_adc(ID1)
        values[ID2] = mcp.read_adc(ID2)
        if(sig == 0):
            screen.blit(bored1, bored1_rect)
            sig = 1
        else:
            screen.blit(bored2, bored2_rect)
            sig = 0
        pygame.display.flip()
        time.sleep(0.2)
        if(firstshow):
            os.system('echo "I have nothing to do, can you play a game with me?" | festival --tts')
            firstshow = False
        # Either sensor pressed: report that the user accepted the game.
        if((values[ID1] < 700 and values[ID1] >= 150) or (values[ID2] < 700 and values[ID2] >= 150)):
            return True
    return False

def pet_sad(screen, duration):
    start_time = time.time()
    Head = True
    ID3 = 7
    init_arms()
    sad1 = pygame.image.load("/home/pi/project/picture/sad1.jpeg")
    sad1 = pygame.transform.scale(sad1, (240, 240))
    sad1_rect = sad1.get_rect(center=(160, 120))
    sad2 = pygame.image.load("/home/pi/project/picture/sad2.jpeg")
    sad2 = pygame.transform.scale(sad2, (240, 240))
    sad2_rect = sad2.get_rect(center=(160, 120))
    sig = 0
    start_time = time.time()
    firstshow = True
    while(time.time() - start_time < duration and Head):
        values = [0]*8
        values[ID3] = mcp.read_adc(ID3)
        if(sig == 0):
            screen.blit(sad1, sad1_rect)
            sig = 1
            forw("Left")
            back("Right")
        else:
            screen.blit(sad2, sad2_rect)
            sig = 0
            mid("Left")
            mid("Right")
        pygame.display.flip()
        time.sleep(0.2)
        if(firstshow):
            os.system('echo "I just failed my prelim, I am so upset!" | festival --tts')
            firstshow = False
        if(values[ID3] < 700 and values[ID3] >= 150):
            Head = False
            print(values[ID3], "hhh")

def pet_angry(screen, duration):
    start_time = time.time()
    Hug = True
    ID1 = 0
    ID2 = 4
    init_arms()
    angry1 = pygame.image.load("/home/pi/project/picture/angry1.jpeg")
    angry1 = pygame.transform.scale(angry1, (240, 240))
    angry1_rect = angry1.get_rect(center=(160, 120))
    angry2 = pygame.image.load("/home/pi/project/picture/angry2.jpeg")
    angry2 = pygame.transform.scale(angry2, (240, 240))
    angry2_rect = angry2.get_rect(center=(160, 120))
    sig = 0
    firstshow = True
    while((time.time() - start_time < duration) and Hug):
        # Read the two ADC channels used for the hug check.
        values = [0]*8
        values[ID1] = mcp.read_adc(ID1)
        values[ID2] = mcp.read_adc(ID2)
        if(sig == 0):
            screen.blit(angry1, angry1_rect)
            sig = 1
            mid("Left")
            mid("Right")
        else:
            screen.blit(angry2, angry2_rect)
            sig = 0
            mid("Left")
            mid("Right")
        pygame.display.flip()
        time.sleep(0.2)
        if(firstshow):
            os.system('echo "I am not in a good mood, I am about to explode! I need a hug now!" | festival --tts')
            firstshow = False
        # Both sensors pressed at once counts as a hug and ends the angry state.
        if(values[ID1] < 700 and values[ID1] >= 150 and values[ID2] < 700 and values[ID2] >= 150):
            Hug = False
            print(values[ID1], "hhh", values[ID2])
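
The handlers in PET_ASBH.py repeat the same window test on each force-sensitive resistor reading (`value >= 150 and value < 700`, or 50 to 1000 in `pet_happy`). As a minimal sketch, and not part of the original project, that test could be factored into one helper. The names `is_pressed`, `both_pressed`, `PRESS_MIN` and `PRESS_MAX` are hypothetical; the thresholds are copied from the listing above.

```python
# Hypothetical helpers; these names do not appear in the original project.
PRESS_MIN = 150  # inclusive lower bound used by most handlers
PRESS_MAX = 700  # exclusive upper bound used by most handlers


def is_pressed(value, low=PRESS_MIN, high=PRESS_MAX):
    """Return True when one ADC reading lies inside the press window."""
    return low <= value < high


def both_pressed(first, second, low=PRESS_MIN, high=PRESS_MAX):
    """Return True when two ADC readings both lie inside the press window."""
    return is_pressed(first, low, high) and is_pressed(second, low, high)
```

With such a helper, the hug check in `pet_angry` might read `both_pressed(values[ID1], values[ID2])`, and the wider window in `pet_happy` might read `both_pressed(values[ID1], values[ID2], low=50, high=1000)`.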

PET_WANDER.py

import RPi.GPIO as GPIO
import time
from two_wheel import *
from test_servo import *
import random
import pygame
import os

GPIO.setmode(GPIO.BCM)
TRIG = 12
ECHO = 16
GPIO.setup(TRIG,GPIO.OUT)
GPIO.setup(ECHO,GPIO.IN)

def pet_wander(screen, duration):
    start_time = time.time()
    init_servo()
    init_arms()
    wander1 = pygame.image.load("/home/pi/project/picture/wander1.jpeg")
    wander1 = pygame.transform.scale(wander1, (240, 240))
    wander1_rect = wander1.get_rect(center=(160, 120))
    wander2 = pygame.image.load("/home/pi/project/picture/wander2.jpeg")
    wander2 = pygame.transform.scale(wander2, (240, 240))
    wander2_rect = wander2.get_rect(center=(160, 120))
    last_status = -1
    last_distance = 1000
    pygame.mixer.init()
    pygame.mixer.music.load("/home/pi/project/videos/SCG.mp3")
    pygame.mixer.music.play()
    while (time.time() - start_time < duration):
        GPIO.output(TRIG,False)
        #print "waiting for the sensor to settle"
        time.sleep(0.2)
        # Send a 10 microsecond trigger pulse.
        GPIO.output(TRIG,True)
        time.sleep(0.00001)
        GPIO.output(TRIG,False)
        # Give both timestamps a value in case either wait loop never runs.
        pulse_start = pulse_end = time.time()
        while GPIO.input(ECHO) == 0:
            pulse_start = time.time()
        while GPIO.input(ECHO) == 1:
            pulse_end = time.time()
        pulse_duration = pulse_end - pulse_start
        # 17150 = 34300 cm/s (speed of sound) / 2 for the round trip.
        distance = pulse_duration * 17150
        distance = round(distance , 2)
        '''
        if distance < 400 and distance > 2:
            print "distance:",distance,"cm"
        else:
            print "out of range"
        '''
        # status: 0 = forward, 1 = left, 2 = right, 3 = back
        status = int(random.random() * 30) % 3
        if(distance > 1200):
            status = 3
        elif(distance > 35):
            status = 0
        elif(distance <= 15):
            status = 3
        else:
            if(last_distance == -1):
                status = 1
            elif(last_distance >= distance):
                if(last_status == 1 or last_status == 2):
                    status = 3 - last_status
                else:
                    status = 1
            else:
                if(last_status == 1 or last_status == 2):
                    status = last_status
                else:
                    status = 1
        last_status = status
        last_distance = distance
        if (status == 0):
            #os.system('echo "' + str(distance) + ', forward" | festival --tts')
            two_wheel("Right_servo", "clockwise")
            two_wheel("Left_servo", "counter_clockwise")
            temp_time = time.time()
            while(time.time() - temp_time < 3):
                screen.blit(wander1, wander1_rect)
                pygame.display.flip()
                forw("Left")
                forw("Right")
                time.sleep(0.2)
                screen.blit(wander2, wander2_rect)
                pygame.display.flip()
                back("Left")
                back("Right")
                time.sleep(0.2)
            #print("Forward")
            two_wheel("Right_servo", "stop")
            two_wheel("Left_servo", "stop")
            stop_arms()
        elif(status == 1):
            #os.system('echo "' + str(distance) + ', left" | festival --tts')
            two_wheel("Right_servo", "clockwise")
            two_wheel("Left_servo", "clockwise")
            temp_time = time.time()
            while(time.time() - temp_time < 1.5):
                screen.blit(wander1, wander1_rect)
                pygame.display.flip()
                forw("Left")
                forw("Right")
                time.sleep(0.2)
                screen.blit(wander2, wander2_rect)
                pygame.display.flip()
                back("Left")
                back("Right")
                time.sleep(0.2)
            #print("Left")
            two_wheel("Right_servo", "stop")
            two_wheel("Left_servo", "stop")
            stop_arms()
        elif(status == 2):
            #os.system('echo "' + str(distance) + ', right" | festival --tts')
            two_wheel("Right_servo", "counter_clockwise")
            two_wheel("Left_servo", "counter_clockwise")
            temp_time = time.time()
            while(time.time() - temp_time < 1.5):
                screen.blit(wander1, wander1_rect)
                pygame.display.flip()
                forw("Left")
                forw("Right")
                time.sleep(0.2)
                screen.blit(wander2, wander2_rect)
                pygame.display.flip()
                back("Left")
                back("Right")
                time.sleep(0.2)
            two_wheel("Right_servo", "stop")
            two_wheel("Left_servo", "stop")
            stop_arms()
            #print("Right")
        else:
            #os.system('echo "' + str(distance) + ', back" | festival --tts')
            two_wheel("Right_servo", "counter_clockwise")
            two_wheel("Left_servo", "clockwise")
            temp_time = time.time()
            while(time.time() - temp_time < 2):
                screen.blit(wander1, wander1_rect)
                pygame.display.flip()
                forw("Left")
                forw("Right")
                time.sleep(0.2)
                screen.blit(wander2, wander2_rect)
                pygame.display.flip()
                back("Left")
                back("Right")
                time.sleep(0.2)
            two_wheel("Right_servo", "stop")
            two_wheel("Left_servo", "stop")
            stop_arms()
            #print("back")
    two_wheel("Right_servo", "stop")
    two_wheel("Left_servo", "stop")
    stop_arms()
    pygame.mixer.music.stop()
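
The distance conversion and the direction choice inside `pet_wander` do not depend on the hardware, so they can be checked on a desktop machine. The following is a rough sketch under that assumption, not the authors' code: `pulse_to_cm`, `choose_status` and the four direction constants are hypothetical names, while the thresholds (1200, 35 and 15 cm) and the factor 17150 come from the listing above.

```python
# Hypothetical, hardware-free restatement of the logic in pet_wander.
SPEED_OF_SOUND_CM_S = 34300  # approximate speed of sound in air

FORWARD, LEFT, RIGHT, BACK = 0, 1, 2, 3  # same status codes as pet_wander


def pulse_to_cm(pulse_duration):
    """Convert an echo pulse width in seconds to a distance in cm."""
    # The echo covers the round trip, so halve it: 34300 / 2 = 17150.
    return round(pulse_duration * SPEED_OF_SOUND_CM_S / 2, 2)


def choose_status(distance, last_distance, last_status):
    """Pick the next movement from the current and previous readings."""
    if distance > 1200:   # implausibly large reading: back up
        return BACK
    if distance > 35:     # path is clear: go forward
        return FORWARD
    if distance <= 15:    # too close: back up
        return BACK
    # Between 15 and 35 cm: turn.
    if last_distance == -1:
        return LEFT
    was_turning = last_status in (LEFT, RIGHT)
    if last_distance >= distance:
        # Not getting farther away: swap the turn direction (1 <-> 2).
        return 3 - last_status if was_turning else LEFT
    # Getting farther away: keep turning the same way.
    return last_status if was_turning else LEFT
```

Separating these two steps from the GPIO and servo calls would let the thresholds be tuned with plain unit tests before running on the robot.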