Sustainable SmartRoom

ECE 5725

A Project By Rohit Krishnakumar (rk496) and Asad Marghoob (am2242).

Demonstration Video

Introduction

To implement this project we used a Raspberry Pi along with several sensors, a Pi-compatible microphone, and Python scripts. We used two pressure sensors as a detection mechanism to track people entering and leaving the room. The occupancy count was maintained with GPIO callback routines in Python, together with data structures that recorded the order and times at which each sensor was triggered. A photoresistor served as a light sensor to detect sunlight, and an API call retrieved the current weather; we used both to decide whether there was enough sunlight to illuminate the room. To open and close the blinds we connected a Parallax servo to a Raspberry Pi GPIO pin and built model blinds that open and close as the servo rotates. Lastly, to give the user voice control, a USB microphone connected to the Raspberry Pi continuously recorded short audio files; each file was sent to a speech-to-text service through an API call, and the returned text was parsed to determine whether a command was given to change the state of the lights or the blinds. The current state of the room (number of people, blinds state, and lights state) was also displayed on the PiTFT screen using pygame.

Project Objective:

Sustainability efforts made major strides in 2018, with entire countries and organizations investing in large-scale renewable energy systems. However, we wanted to build a product that stressed energy saving at the personal, day-to-day level. Sustainable SmartRoom is a fully automated home device that speaks to the idea of small but ultimately meaningful measures that we as individuals can all take toward saving our planet. With lights/blinds control, insight into the current weather, and a user-oriented voice control element, Sustainable SmartRoom encourages mindful steps in the right direction when it comes to sustainability.

Design

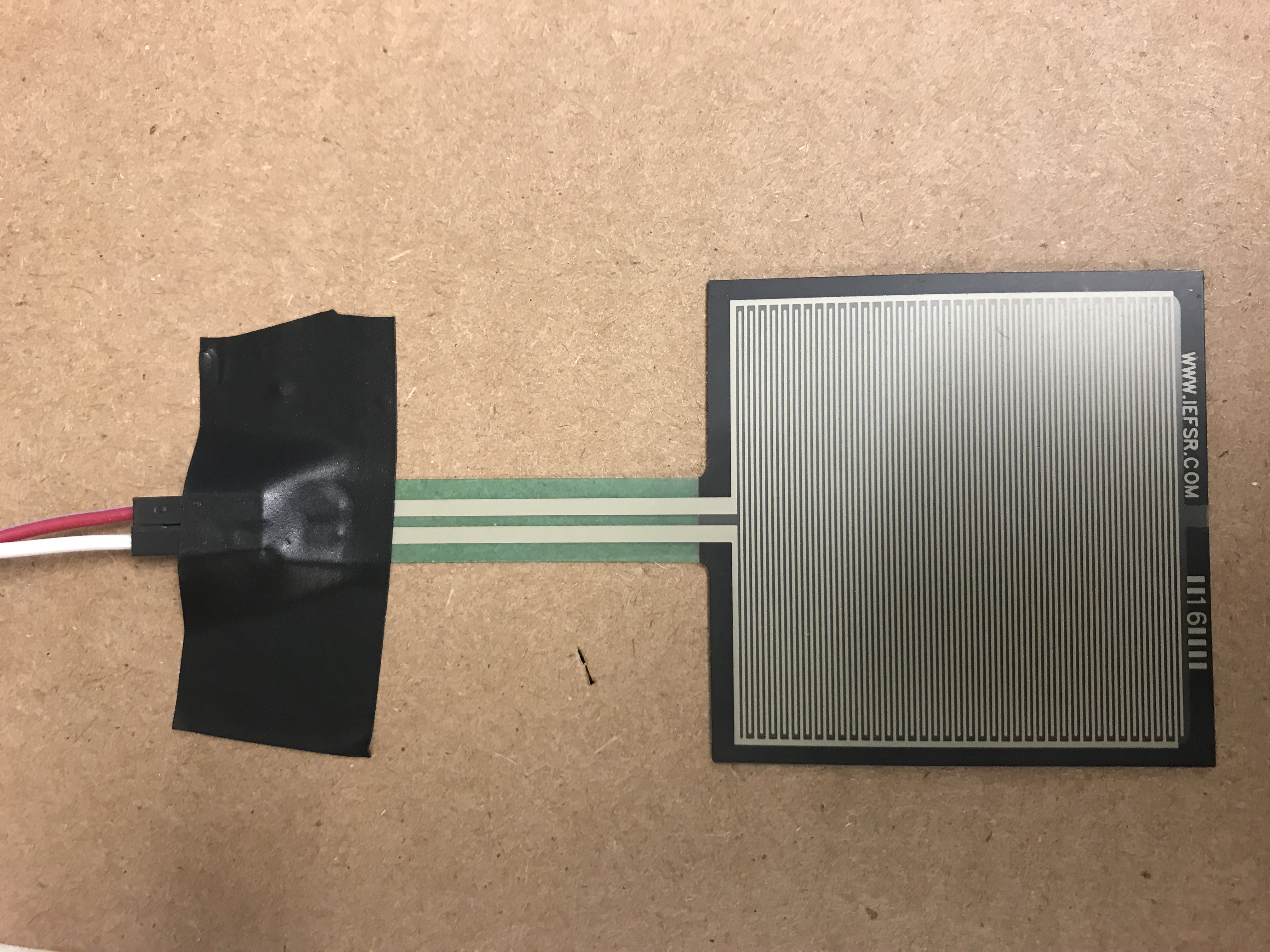

Pressure Sensors:

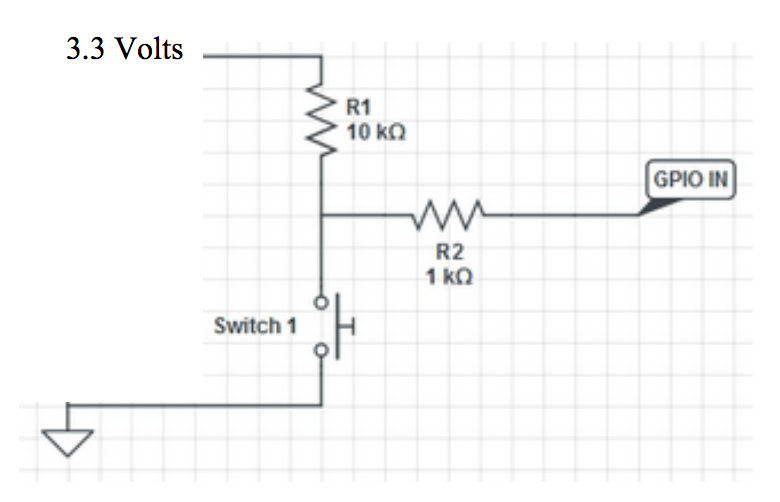

The first thing we tackled for this project was using the pressure sensors to count the number of people in the room. We first laid out the logic for classifying someone as entering or leaving: if the second mat is triggered within a short window after the first mat, someone has entered, and vice versa for an exit. We planned this around a three-second window; the final code in the appendix uses 1.5 seconds. A global counter variable is updated whenever an entry or exit is detected. We initially planned to use a polling loop that constantly checks whether either mat was triggered, but when we started writing the code we realized that callback routines would be a much more efficient and cleaner approach. The pressure mats were connected to GPIO pins and generated a GPIO event when triggered. They were connected using the following circuitry:

We then registered a callback routine on the GPIO event for each mat; each callback calls its own handler function (A_react and B_react in the code appendix).

Our initial approach for the callback functions was to run a while loop for 3 seconds, waiting to see whether the other mat was triggered within that time, which would indicate an entry or exit. However, we quickly realized that a while loop inside a callback function was not only inefficient and troublesome: if one mat was triggered while the other mat's callback was still looping, the two callbacks interfered with each other, so the waiting loop could not reliably see the second trigger. This made it impossible to accurately process entries and exits.

Our solution was to create a global list for each mat containing a boolean that records whether the mat has been triggered and a timestamp of when it was triggered, taken from Python's time library. With this, each callback simply reads the other mat's list and compares that timestamp with the current time to decide whether an entry or exit has occurred, and then calls an update-state function. This keeps each callback very short, so it does not interfere with the other callback routine. The update-state function takes a parameter indicating whether someone is entering or exiting and uses it to update the global count of people in the room.
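The two-list approach above can be sketched in plain Python, with the GPIO layer left out so the logic can be exercised off the Pi. This is a minimal sketch under stated assumptions, not the project's exact code: the class and method names are hypothetical, the window defaults to the 1.5 seconds used in the appendix, a matched trigger is cleared rather than shifted back 3 seconds as the appendix does, and the count is clamped at zero, which the original may not do.

```python
import time


class DoorwayCounter:
    """Hypothetical occupancy counter driven by two mat callbacks.

    Mat A is the entry mat and mat B the exit mat, as in A_react/B_react.
    """

    def __init__(self, window_s=1.5):
        self.window_s = window_s
        self.people = 0
        # [triggered?, timestamp] for each mat, like matA_trigger / matB_trigger
        self.mat_a = [False, 0.0]
        self.mat_b = [False, 0.0]

    def on_mat_a(self, now=None):
        now = time.time() if now is None else now
        if self.mat_b[0] and now - self.mat_b[1] < self.window_s:
            # B then A within the window: someone walked out
            self.mat_b = [False, 0.0]  # consume B so it cannot pair twice
            self.people = max(0, self.people - 1)  # assumption: clamp at zero
        else:
            self.mat_a = [True, now]  # remember A; a later B means entry

    def on_mat_b(self, now=None):
        now = time.time() if now is None else now
        if self.mat_a[0] and now - self.mat_a[1] < self.window_s:
            # A then B within the window: someone walked in
            self.mat_a = [False, 0.0]  # consume A so it cannot pair twice
            self.people += 1
        else:
            self.mat_b = [True, now]  # remember B; a later A means exit
```

On the Pi, each method could be registered as a callback with RPi.GPIO's add_event_detect, for example on GPIO.FALLING edges, assuming a pressed mat pulls its pulled-up input low. The optional `now` argument exists only so the logic can be tested with fixed times.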

Pressure sensor testing

To test that our pressure sensors and callback routines were working properly, we first had the callback functions simply print to the console so we could test the mats interactively. Once we had fully implemented the callback functions and the update-state function, we hooked up LEDs to the Raspberry Pi to represent the room lights, so that we could confirm the lights turned on or off as people entered and exited the room. To implement the lights we created a global lights_state variable; inside the update-state function, whenever the number of people in the room changed, we checked whether lights_state matched what the room needed and turned the LEDs on or off only if necessary. Checking the current state before acting was important because we knew we would eventually also be opening and closing blinds, and we did not want to try to open blinds that were already open. While testing the pressure sensors with the LEDs, we noticed that the sensors were fairly unreliable and did not always register an entry or exit when they were supposed to. Eventually we realized this was because the while loop at the end of our Python script contained only "pass". This tight busy loop interfered with the callback functions, so we added a delay inside the loop, which immediately fixed our issue with the sensor mats. Once we had solidified the callback logic and fixed the while loop, testing of the pressure sensors went smoothly.
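A minimal sketch of the state-checking idea and of the loop fix, assuming the LED write is passed in as a function (on the Pi it would wrap GPIO.output(LED_pin, ...)); the class and function names are hypothetical. The controller only touches the hardware when the needed state differs from the stored one, and the main loop sleeps on each iteration instead of spinning on `pass`.

```python
import time


class LightsController:
    """Switch the lights only when the needed state differs from the current one."""

    def __init__(self, write_output):
        # write_output(bool) stands in for GPIO.output(LED_pin, ...) on the Pi
        self.write_output = write_output
        self.lights_state = False

    def update(self, people):
        needed = people > 0
        if needed != self.lights_state:
            self.write_output(needed)  # hardware is touched only on a change
            self.lights_state = needed
        return self.lights_state


def main_loop(period_s=0.1, max_iterations=None):
    """Keep the script alive for the GPIO callbacks without busy-waiting.

    A bare `while True: pass` spins constantly; sleeping each iteration
    leaves the processor free for the callback routines. max_iterations
    exists only for testing; None means run forever.
    """
    n = 0
    while max_iterations is None or n < max_iterations:
        time.sleep(period_s)
        n += 1
```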

Weather API Request

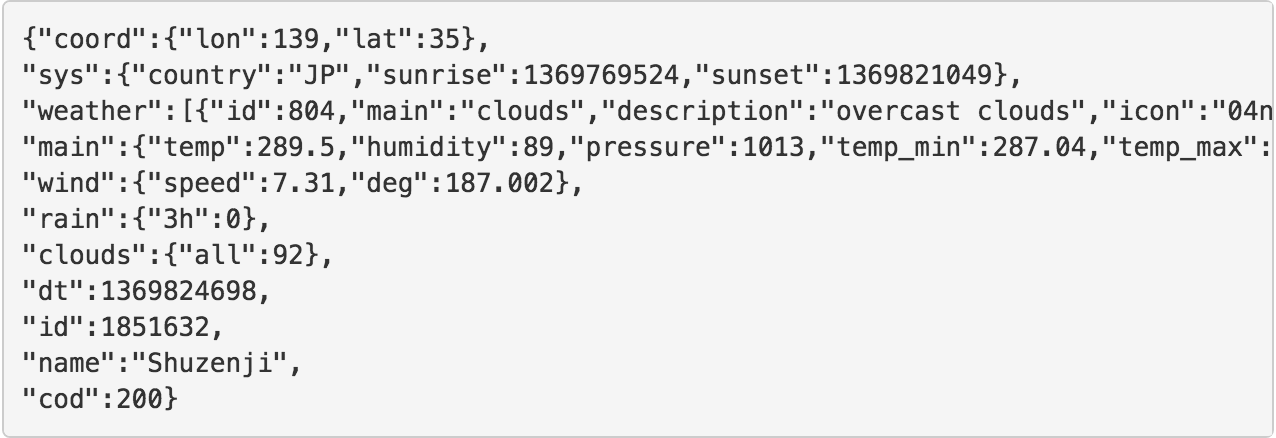

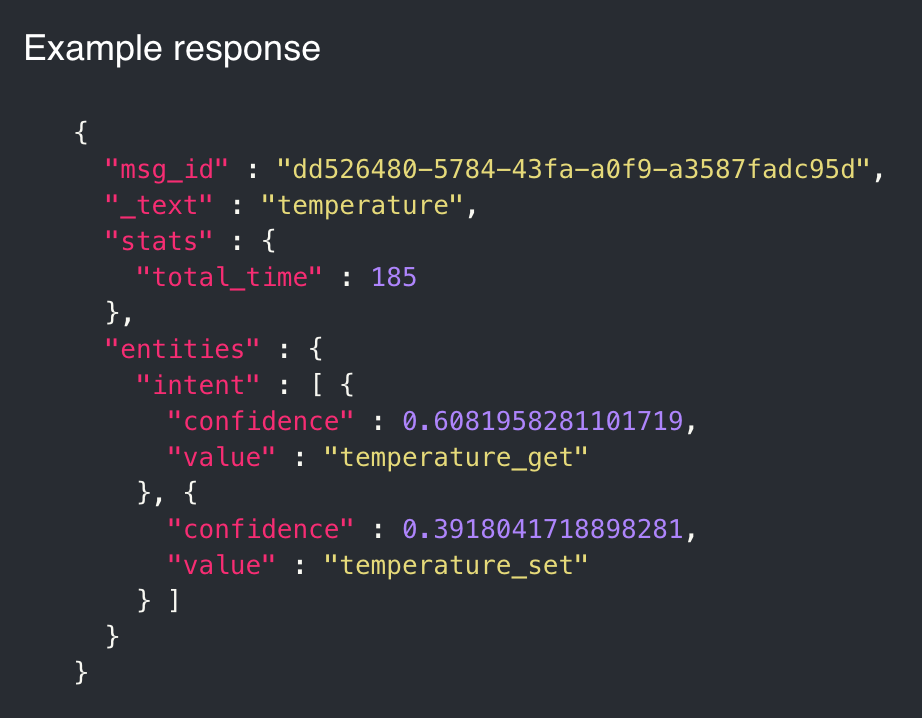

To gather information on the current weather we used the OpenWeatherMap API. This service provides current weather information for any specified location and has easy-to-use documentation. We made the request with Python's urllib2 module. A major issue we ran into at this point was that even though our request matched what the documentation said to do, we received an error. Upon research, this turned out to be an authentication issue: after exploring the OpenWeatherMap website further, we found that API calls require a valid key (token), so we created a free account on their website and used the key we were given in our request. After we added this key, the request successfully returned data. We hard-coded Ithaca as the location in the API request and received a JSON-formatted result, which we parsed to determine whether the weather was indicative of sunlight. The following is a snippet of an example JSON response:

The weather data in the JSON structure is given as condition ID codes, each representing a different weather state (all weather condition codes can be found at: https://openweathermap.org/weather-conditions). We decided that a condition code above 700 meant it was sunny enough outside, which should give a positive result when checking whether there is enough light from outdoors. We packaged the code for making the API call and parsing the JSON data into a function called getWeather, which we used inside a separate function that checks whether there is enough sunlight from outdoors. getWeather was also written so that we never make API requests within 10 minutes of each other: it saves the time of each request, and if it is called within 10 minutes of the last request, the previously fetched data is reused instead of making another request. This was recommended on the OpenWeatherMap site, as the weather does not change very rapidly and making hundreds of API requests every minute would be very inefficient.
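The request, the 10-minute reuse rule, and the above-700 test could look roughly like this in Python 3 (the appendix uses Python 2's urllib2). This is a sketch, not the project's getWeather(): the function and class names are hypothetical, the fetch function and clock are injected so the caching can be checked without network access, and you would substitute your own OpenWeatherMap API key.

```python
import json
import time
from urllib.parse import urlencode
from urllib.request import urlopen

API_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_url(city, api_key):
    """Current-weather URL for a city, with the API key passed as appid."""
    return API_URL + "?" + urlencode({"q": city, "appid": api_key})


def fetch_weather(city, api_key):
    """One live request; returns the parsed JSON response as a dict."""
    with urlopen(build_url(city, api_key), timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


class WeatherCache:
    """Reuse the last response until it is older than max_age_s (10 minutes)."""

    def __init__(self, fetch, max_age_s=600, clock=time.time):
        self.fetch = fetch
        self.max_age_s = max_age_s
        self.clock = clock
        self.data = None
        self.fetched_at = None

    def get(self):
        now = self.clock()
        if self.data is None or now - self.fetched_at > self.max_age_s:
            self.data = self.fetch()  # only here is a new request made
            self.fetched_at = now
        return self.data


def is_sunny(weather, threshold=700):
    """Project rule: a condition ID above 700 counts as sunny enough."""
    return weather["weather"][0]["id"] > threshold
```

In use, something like `WeatherCache(lambda: fetch_weather("Ithaca,us", MY_KEY))` (with `MY_KEY` being your own key) would make at most one request per 10 minutes no matter how often `get()` is called.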

Once the weather API call was working, we changed our existing logic from simply turning the lights on when someone is in the room to first checking whether there is sunlight from outdoors. To do this we created a lights function with a parameter indicating whether the lights need to be turned on or off. If they need to be turned on, we first call the getWeather() function and then check whether the ID code represents a sunny day. If so, we call a placeholder function that would eventually open the blinds; if there isn't enough sunlight, we simply turn the lights on. The update-state function calls this lights function whenever the room has no light but light is needed.

The last piece we incorporated from the weather API call was a check of the current time against the sunrise and sunset times for that day, which are included in the weather response. If an entry or exit happens before sunrise or after sunset, the system automatically concludes that there is not enough sunlight.
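The sunrise/sunset check and the weather rule combine into a single decision. A minimal sketch, assuming the OpenWeatherMap response's `sys.sunrise` and `sys.sunset` fields (Unix timestamps, directly comparable with `time.time()`) and the project's above-700 rule; the function names and return strings are hypothetical, and the photoresistor input described in the next section is left out.

```python
import time


def is_daytime(weather, now=None):
    """True if `now` (Unix seconds) lies between today's sunrise and sunset."""
    now = time.time() if now is None else now
    return weather["sys"]["sunrise"] <= now < weather["sys"]["sunset"]


def choose_light_source(weather, now=None, threshold=700):
    """Open the blinds only in daytime with a qualifying condition ID."""
    if is_daytime(weather, now) and weather["weather"][0]["id"] > threshold:
        return "open_blinds"
    # before sunrise, after sunset, or poor weather: fall back to the lights
    return "lights_on"
```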

Weather API testing

Most of the testing for the weather API call was done by simply making a call from the command line and viewing the output. Once we were able to authenticate successfully, parsing the JSON data proved fairly straightforward, as it is a simple key-value structure. The final test for the weather API was a test of the entire system. We checked that when the weather was nice outside, the placeholder blinds function was called when someone entered, rather than the LEDs being turned on. We also checked that at night, after sunset, the LEDs turned on rather than the blinds opening, even if there had been good weather during the day. During this testing we also connected the blinds function to a servo, which would eventually drive our demo blinds. This was simple to implement, as the servo is controlled with a PWM signal on a GPIO pin.

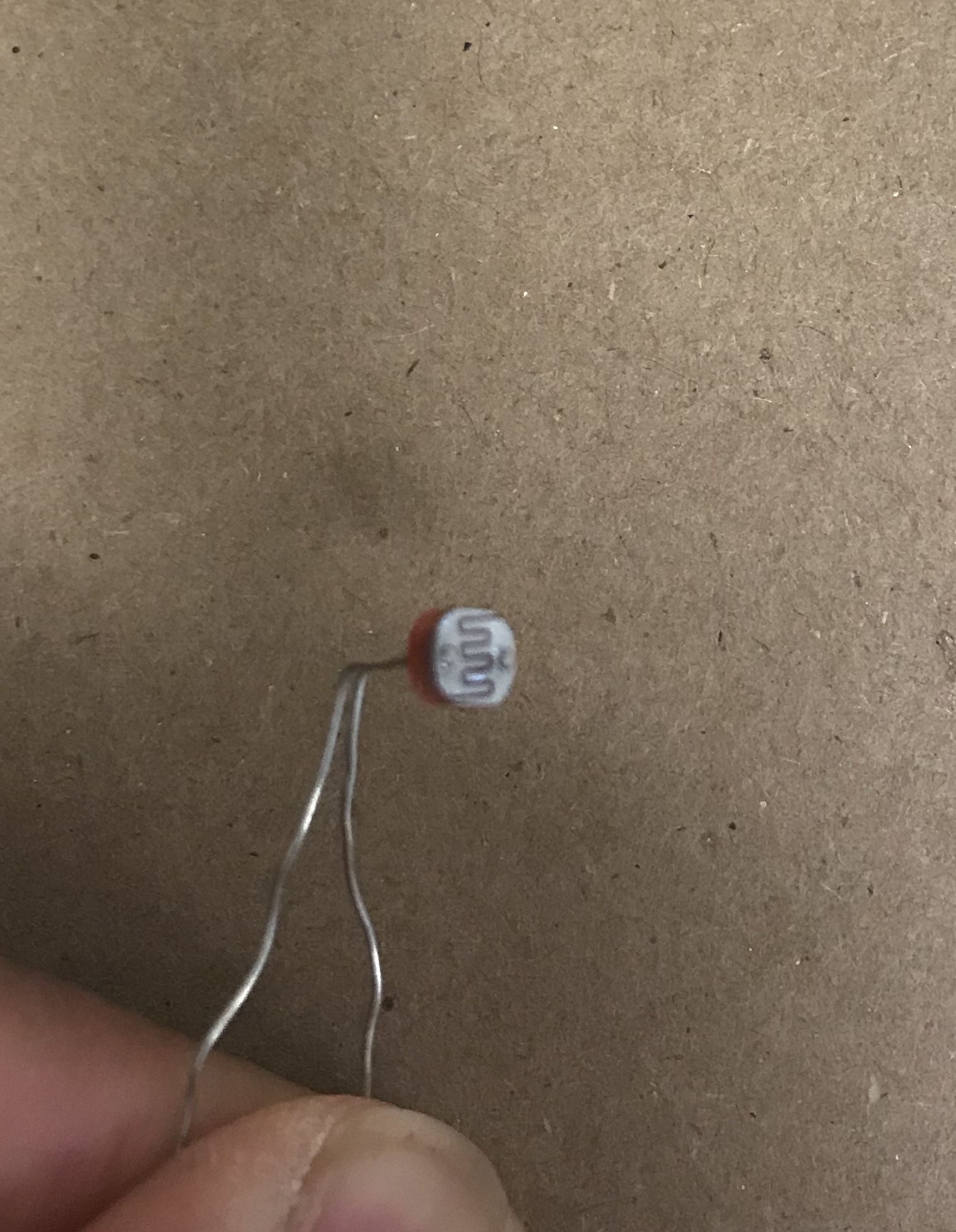

Photoresistor Sunlight Sensor

Upon entry of a person (when the current count is zero), our system checks two conditions before deciding whether it is appropriate to open the blinds. The weather API call described above is one half of this query. However, we felt there could be edge cases, such as the weather in a particular neighborhood not matching the reported weather for the greater region or town. We therefore wanted a physical input to our system that gives further insight into whether the blinds should open when a person enters.


To accomplish this, we used a single light-dependent resistor (LDR) connected to the Pi through GPIO. Because the Pi has no analog input pins, we designed a simple RC charging circuit to measure light intensity: a 1 µF capacitor is placed in series with the LDR, and a wire from the junction between the capacitor and the LDR goes to our input pin. The circuit lets us estimate light intensity by measuring how long the capacitor takes to charge; the brighter the light, the lower the LDR's resistance and the faster the capacitor charges. The input pin initially reads GPIO.LOW, and once the capacitor has charged to about 80%, it reads GPIO.HIGH. We increment a variable called count while waiting, so count measures how long the capacitor took to charge. A very low count therefore means high light intensity.

When we first implemented this function, we noticed occasional outliers in the data that did not reflect the true light intensity. Therefore, within our sun sensor method, we take five separate count readings, append them to a list, and use their average, which reduces the effect of any outliers that might otherwise skew the result.
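The averaging step and the threshold can be isolated from the GPIO timing loop. In this sketch, `read_once` is a hypothetical stand-in for one discharge-and-count cycle of the appendix's sun_sensor(), and the default threshold of 100 is the value reported in the photoresistor testing section; the function names are hypothetical.

```python
from statistics import mean


def average_count(read_once, samples=5):
    """Average several RC charge-time readings to damp occasional outliers."""
    return mean(read_once() for _ in range(samples))


def enough_sunlight(avg_count, threshold=100):
    """A shorter charge time means a brighter LDR, so low counts mean sunlight."""
    return avg_count < threshold
```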

Photoresistor testing

To test this system, we first made sure the method worked in isolation, i.e. in its own file without the rest of the logic. We did this by calling the function in a while loop and printing the count value every half second. Once this worked, we put the function into our main program file and commented out the weather API call, so that the LDR reading was the only input influencing the decision upon entry. Since the blinds were not implemented at this point, we simply printed a statement to the console whenever the blinds were to be opened. We tuned the threshold to about 100: an average count below this value indicated ample sunlight from outside, or a flashlight beam from one of our phones. We also covered the LDR to make sure that the "blinds open" statement would not run.

Finally, we integrated this with the weather API. We hard-coded certain IDs for both favorable and unfavorable weather conditions to check that the logic held up under different sensor inputs. We were pleased with how this part of the project turned out, as we had initially been unsure how to process analog sensor values.

Voice Control

After solidifying the entry and exit system and the logic for deciding whether to open the blinds or turn on the lights, we moved on to integrating voice control into the system. We used a USB microphone to record audio files and then sent each file in a request to the Wit.ai API. Wit.ai is a free service that provides speech-to-text conversion. As with OpenWeatherMap, we had to create a Wit.ai account to obtain a token for authentication. We then tested the service by recording an audio file and making a request to see whether it accurately converted our recording to text. Wit.ai was very accurate and recognized nearly all of our spoken words. The following is an example response from the API call:

The main issue we ran into with voice control was how to store the data returned by the API request in a variable our Python script could use. The API call, run as a shell command, only returned its exit status (0) and by default printed the response to the console. Our eventual solution was to add "-o output.txt" to the command, which redirected the response into a file. We then read this file and used string matching and slicing to extract the text we wanted. Finally, an if/else structure checked this string for certain keywords. The commands we looked for were as follows:

- Open Blinds

- Close Blinds

- Turn on Lights

- Turn off Lights

If the string contained, say, both "open" and "blinds", the blinds would open regardless of the other words spoken, which eases the rigidity of requiring exact commands. When one of the four commands was recognized, the appropriate function was called to execute it. We also made sure to update the lights or blinds state variable accordingly, so that voice control would not conflict with the logic in the rest of the project.
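Two pieces of the pipeline above can be sketched in Python 3. `run_and_capture` shows an alternative to writing the response to output.txt: `subprocess.run` with `capture_output=True` returns a command's standard output directly, whereas `os.system` returns only the exit status. `parse_command` is a hypothetical keyword matcher in the spirit described: it matches whole words, so "on" is not found inside words like "done". The captured speech-to-text response would still need the text-extraction step described above before it is passed to `parse_command`, and the demo command below is a stand-in, not the real API call.

```python
import re
import subprocess
import sys


def run_and_capture(argv):
    """Run a command and return its standard output as text.

    os.system() only returns the exit status; subprocess.run with
    capture_output=True hands the command's stdout back to Python.
    """
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


def parse_command(text):
    """Map free-form transcribed text to one of four commands, or None."""
    # Whole-word matching: "on" should not match inside words like "done"
    words = set(re.findall(r"[a-z]+", text.lower()))
    if words & {"blind", "blinds"}:
        if "open" in words:
            return "open_blinds"
        if "close" in words:
            return "close_blinds"
    if words & {"light", "lights"}:
        if "on" in words:
            return "lights_on"
        if "off" in words:
            return "lights_off"
    return None


if __name__ == "__main__":
    # Stand-in command that prints a transcript; on the Pi, argv would be the
    # command used for the speech-to-text request, without "-o output.txt".
    transcript = run_and_capture([sys.executable, "-c", "print('please open the blinds')"])
    print(parse_command(transcript))  # open_blinds
```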

Because we wanted voice control to work by simply speaking a command at any time, we created a polling system in which the microphone was always listening: it recorded for 3 seconds, created the audio file, ran the API call, and once that computation finished, returned to listening. The alternative we considered was a button that would make the microphone start listening when pressed, but we decided that for the smart room it would be more practical to have the microphone always listening, similar to Amazon's Echo Dot. The issue with this approach was that the API call took a fairly long time, and the microphone could not listen while it was running. As a result, registering voice commands was not perfect, since a command had to be spoken during the period in which the microphone was actually listening. We felt this was acceptable, as during testing it took no more than three attempts to get the timing right and register a command.
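The record, transcribe, act cycle can be outlined as a blocking loop, which makes the timing limitation visible: nothing is recorded while the transcription call runs. A sketch, assuming hypothetical `record`, `transcribe`, and `dispatch` functions are passed in; `arecord_argv` reproduces the arecord options used by vc() in the appendix, and `max_cycles` exists only so the loop can be tested.

```python
import subprocess


def arecord_argv(path="test.wav", seconds=3, device="plughw:1,0"):
    """Argument list for the same arecord call that vc() makes in the appendix."""
    return ["arecord", "-D", device, "-d", str(seconds), path]


def record_clip(path="test.wav", seconds=3):
    # Blocks for `seconds` while arecord captures audio from the USB microphone
    subprocess.run(arecord_argv(path, seconds), check=True)
    return path


def listen_loop(record, transcribe, dispatch, max_cycles=None):
    """Record, transcribe, act, repeat.

    Nothing is recorded while transcribe() runs, which is why a command
    spoken during that time is missed. None for max_cycles means forever.
    """
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        clip = record()
        dispatch(transcribe(clip))
        cycles += 1
```

On the Pi, `record` could be `record_clip`, while `transcribe` and `dispatch` would wrap the speech-to-text request and the command handling; both are left abstract here.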

Voice Control Testing

There were several phases of testing we did for the voice control feature. First, we confirmed that our USB microphone was working and that the audio files it created were reliable. Next, we printed out the results from the API call and checked that it was accurately registering our words. Once this was confirmed, we tested that parsing the output.txt file was done correctly by printing the extracted string to the console. Lastly, after adding our commands and integrating voice control with the rest of the system, we interactively tested that the system responded appropriately to each command and updated the lights or blinds.

Blinds

As mentioned in several earlier sections, opening the blinds was one of two possible actions upon entry of a person into the room (the other being turning on the lights). Building the physical blinds was not a major consideration in the early phases of our project, as we were more occupied with brainstorming and programming the logic for the other modules first. Therefore, we did not build the actual structure until the rest of our code and demo was operating smoothly. There were also several design questions about the blinds that we wanted to settle before building the structure. What if the weather conditions changed (from sunny to overcast) while the blinds were open? Should the blinds close if someone walks out of the room?

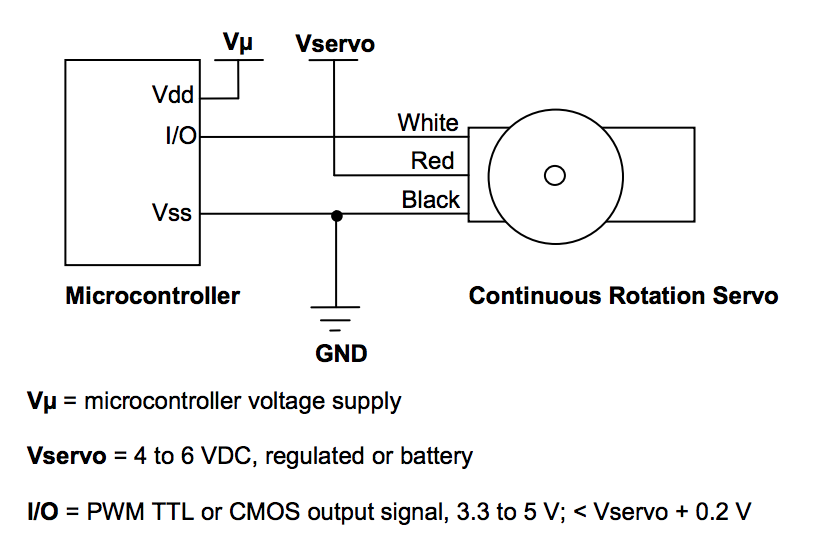

We decided that the blinds would be driven by a servo, with the duty cycle set according to whether the input was to open or close them. The main blinds function took a boolean parameter indicating whether the blinds should be open. It checked the current state first: if the input was "open" (True) and the blinds were already open, the system did nothing; similarly, if the command was to close the blinds and they were already closed, nothing happened. Otherwise, the function drove the servo in one direction or the other by setting the PWM duty cycle based on the input and the current state. We made the blinds from black tarp wrapped around a rod, which we then attached to our servo motor.
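The state-guarded blinds function can be sketched as follows. The 50 Hz frequency, the 10% (open) and 3% (close) duty cycles, and the roughly 5-second run come from the appendix; at 50 Hz each period is 20 ms, so 10% corresponds to a 2.0 ms pulse and 3% to 0.6 ms. The class is hypothetical, and the PWM object is injected (on the Pi, an RPi.GPIO PWM instance, which provides ChangeDutyCycle) so the logic can be tested without hardware.

```python
import time

PWM_FREQ_HZ = 50  # matches GPIO.PWM(17, 50) in the appendix
OPEN_DUTY = 10    # % duty cycle the appendix uses to open
CLOSE_DUTY = 3    # % duty cycle the appendix uses to close


def pulse_width_ms(duty_percent, freq_hz=PWM_FREQ_HZ):
    """High time per period: (duty / 100) * (1000 / f) ms, i.e. duty * 10 / f."""
    return duty_percent * 10.0 / freq_hz


class Blinds:
    def __init__(self, pwm, run_seconds=5.0, sleep=time.sleep):
        # pwm: any object with ChangeDutyCycle(percent)
        self.pwm = pwm
        self.run_seconds = run_seconds
        self.sleep = sleep
        self.is_open = False

    def set_open(self, want_open):
        """Move the blinds only if they are not already in the requested state."""
        if want_open == self.is_open:
            return False  # nothing to do
        self.pwm.ChangeDutyCycle(OPEN_DUTY if want_open else CLOSE_DUTY)
        self.sleep(self.run_seconds)  # let the servo run
        self.pwm.ChangeDutyCycle(0)   # stop sending pulses
        self.is_open = want_open
        return True
```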

The servo was wired using the following circuit diagram:

Blinds Testing

Testing the blinds was relatively straightforward: we ran through many iterations of entry and exit under differing conditions to check that the blinds opened when they were supposed to. We also wanted the feature to handle a slightly more complex case: if someone walks into the room after the weather has changed from sunny to overcast, it makes sense for the blinds to close and the lights to turn on at the same time. We realized this during testing and addressed it after implementing the general functionality. Testing also involved making sure our duty-cycle adjustments were sound, as we did not want the blinds to turn too far in either direction.

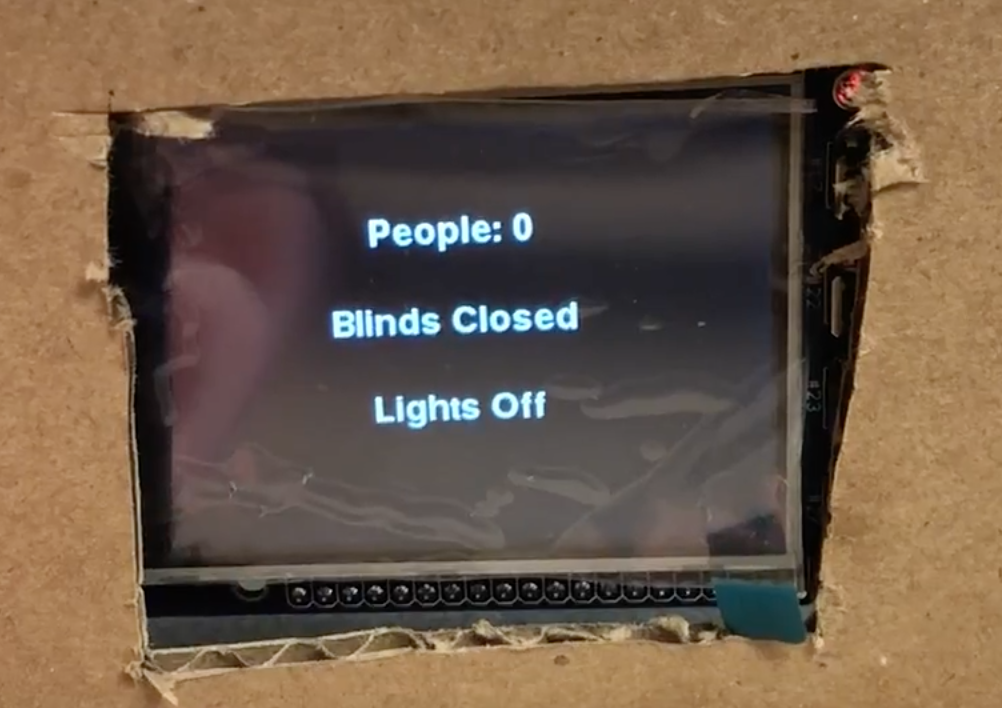

PiTFT State Display

When we started debugging, we simply used print statements to check that our logic flowed the way we intended. However, in the second or third week of the project, we realized that debugging would be easier with a display that always showed the current state of the blinds, the lights, and the number of people in the room. We eventually decided to incorporate this into our demo by having the PiTFT display these states. As in our previous labs, we displayed the states using a dictionary with the three entries we wanted, iterating over it to draw them (at first) on the monitor. Each key was the text to display, and its value was the position at which to display it. We first did this in a separate file to check that the dictionary approach worked on its own. We then integrated it into our main code, using the pygame library from lab, in a function called fill_screen(), and added a call to this function wherever any part of the code changed a state. Our next step was to show these states on the TFT screen instead of the monitor. For this, we needed to add the pertinent os.putenv() calls and make the pygame mouse cursor invisible.
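The state-to-text step can be separated from the drawing code. This hypothetical helper returns the same labels and center positions as fill_screen() in the appendix, as an ordered list of pairs; the drawing loop would then render and blit each entry as fill_screen() does, with one pygame.display.flip() at the end. It is an illustrative refactor, not the project's code.

```python
def status_entries(people, lights_on, blinds_open):
    """Return (text, center_position) pairs for the 320x240 PiTFT status screen."""
    return [
        ("People: " + str(people), (160, 60)),
        ("Blinds " + ("Open" if blinds_open else "Closed"), (160, 110)),
        ("Lights " + ("On" if lights_on else "Off"), (160, 160)),
    ]
```

Keeping this function free of pygame makes the displayed text easy to check without a screen attached.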

PiTFT Testing

For testing the display, we made sure the screen updated correctly for every combination of entry, exit, prior state, and weather conditions. At first the screen did not refresh at all, regardless of how the rest of our code was operating; we quickly realized this was because we had forgotten to call pygame.display.flip() at the end of our function. We also had a lot of trouble getting the states to appear on the TFT at all: no matter what we changed in the code, the states only appeared on the monitor. There were two issues here, which we eventually debugged. First, after looking back at the previous labs that used the TFT, we found a small typo in one of the config files dealing with the frame rate. Second, we had initialized the pygame library before the os.putenv() statements, which prevented anything from being displayed on the screen. We also implemented a bailout quit button on one of the GPIO pins connected to the TFT to make it easy to run multiple tests.

Drawings and Pictures

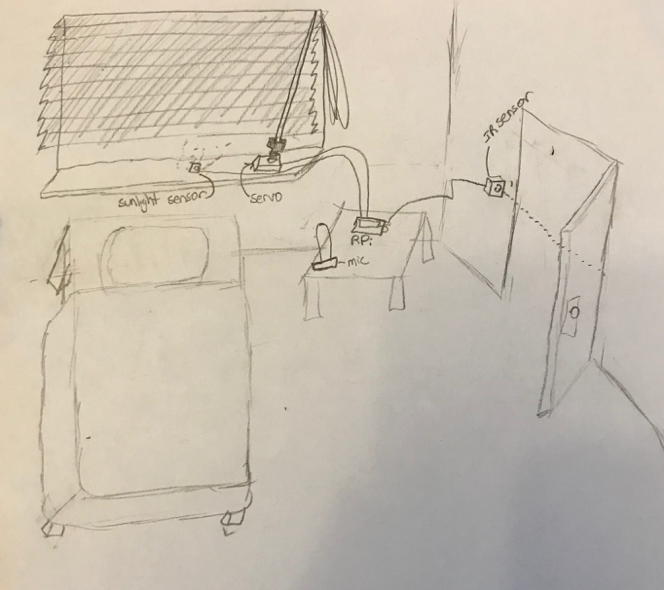

This was our initial drawing of the system for our proposal.

You can see the servo for controlling blinds, a sunlight sensor for reading sunlight, a microphone for voice control, and the Raspberry Pi connected to all of these. These are all features we ended up sticking with and successfully implementing. You can also see an IR sensor on the door. Our initial plan was to use an IR sensor for detecting people entering and exiting the room but we changed that early on to pressure sensors.

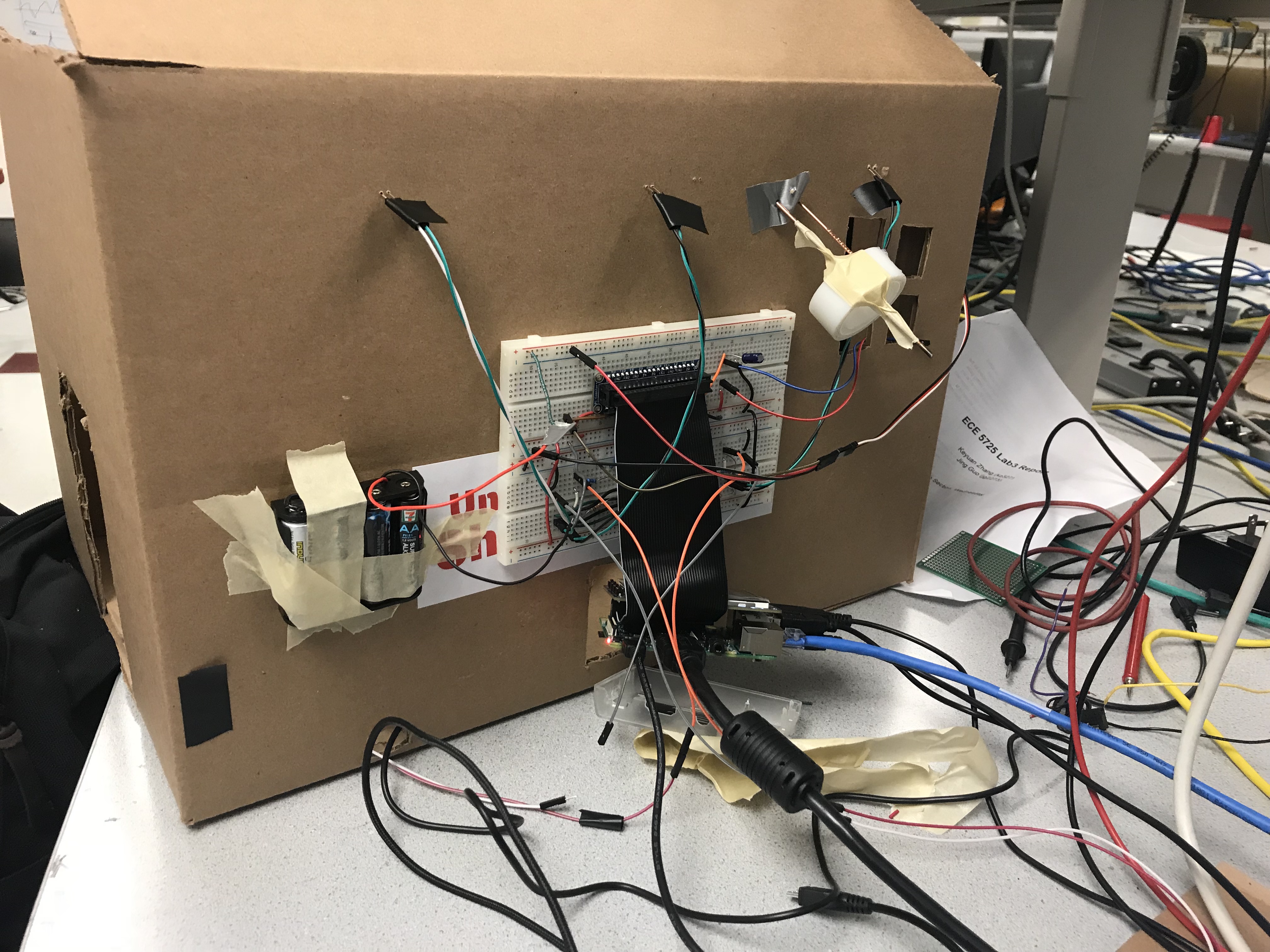

Circuitry/wiring

On our final demo we mounted the Raspberry Pi, the battery pack for the servo, and most of the circuitry/wiring on the back of our box.

Testing

Due to the nature of our project, most of our testing was interactive, with us trying out features as we implemented them. Our testing process was very modular: we implemented a feature and then tested it immediately. The testing of each component is described in the Design section, right after that component, since structuring the section this way more accurately reflects our design and testing process.

Results

Ultimately, everything performed as planned, with only a few minor drawbacks and compromises. We got the pressure sensors operating extremely smoothly, the weather API query combined with the physical light readings always gave accurate data, and the lights and blinds always responded according to the actual circumstances.

One example of a drawback was our polling for voice input. As explained in the voice control design section, there is considerable overhead in recording the audio file and waiting for Wit.ai to process it. As a result, there is only a limited window in which the user can say something that the system will capture and interpret: the user must say the full command clearly within the 3-second window in which the microphone is listening. However, on average, repeating the command about twice is enough to trigger it.

One more aspect that could use a little polishing is our blinds. We did not standardize the amount of rotation needed for opening and closing, given the size of the rod and tarp. Because of this, the opening and closing of the blinds is not always consistent; in other words, an "open" set of blinds can end up in several positions that all fall under the general label of open. The same is true for closed blinds.

Conclusion

Overall, the system achieved the vast majority of the results that we had outlined from the start. We found it particularly rewarding that so many of the features we brainstormed in the early phases of our project were realized and smoothly integrated into a cohesive system. Since our project was largely modular, it was feasible to plan multiple components from the start, work on them separately, and then integrate them piece by piece.

We ended up with a TFT display showing the number of people in the room and the states of the blinds and lights, a microphone interface for voice control, sun sensor readings, weather API calls, and pressure sensor detection for entry and exit. With these features, the system provides a fully automated, sustainable room environment that still accounts for user overrides based on personal preference.

The only major component that we did not see through was the smartphone app user interface. Setting up the Pi as a Wi-Fi access point to add more user control seemed somewhat redundant, since our fully operational voice control commands already covered this use case and gave the user complete control.

Future Work

If we had more time to continue working on this smart room project, one of the first features we would expand upon is voice control. With the structure we have in place, it would be very easy to add new commands and link each of them to a function.

Alarm System and Blinds

One voice command feature we would implement is an alarm system that opens the blinds before the alarm goes off, so that the user can wake up to a well-lit room. The alarm would be set through voice control by specifying a time. We would implement this by first adding a speaker to the smart room so that an alarm can be played, and then adding a voice command that lets the user specify the alarm time. A function would store this alarm time and check every minute or so whether the current time matches it. When the alarm time is reached, the system would play an alarm sound through the speaker until a button is pressed to indicate that the user has woken up. The same function would also check whether the current time is within 10 minutes of the alarm, and if so open the blinds, so the user is primed to wake up soon and wakes up to a well-lit room. This is helpful because many people find it easier to wake up and start the day in a well-lit room than in a dark room, where they may still feel sleepy.
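The proposed periodic check could be sketched as below. It is a hypothetical design, not something implemented in the project: the function name and the 10-minute lead constant are illustrative, it uses `>=` comparisons rather than an exact time match so that a check running a little late still fires, and the caller would clear or reschedule the alarm once the user presses the button.

```python
from datetime import datetime, timedelta

BLINDS_LEAD = timedelta(minutes=10)  # open the blinds this long before the alarm


def alarm_actions(now, alarm_at):
    """Return (open_blinds, sound_alarm) for a single periodic check."""
    open_blinds = now >= alarm_at - BLINDS_LEAD
    sound_alarm = now >= alarm_at
    return open_blinds, sound_alarm
```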

Music Playback

Another voice command we could add is music playback. This would use the same speaker as the alarm, and we would need a music library of some sort to search for and play songs. This would be a very practical feature, as many people enjoy listening to music in their room, and voice control would make that very easy.

Smartphone App Remote Control

Beyond voice control, we would also like to build a smartphone app that lets the user control the room remotely. This was a feature we originally considered for our project, but we chose voice control instead, as having both an app and voice control seemed redundant. However, an app that could be used from any location, rather than just inside the room, would be both useful and a challenging feature to implement.

Adjustable Blinds Attachment

Lastly, if we had more time, we would have liked to create an adjustable servo attachment that links onto any blinds setup. We would design it in CAD so that it fits onto the Parallax servo and has an adjustable end that fits the rod of a blinds setup, then 3-D print it and incorporate it into our project.

Work Distribution

Demo Day Picture

Rohit

rk496@cornell.edu

Designed the pressure sensor callback routine logic. Implemented the voice control feature. Built the blinds model with servo. Worked on circuitry and building the demo.

Asad

am2242@cornell.edu

Designed the LDR sensor logic and circuit. Worked on the PiTFT display. Implemented the weather API functionality. Worked on circuitry and building the demo.

Acknowledgements

We would like to give a huge thanks to Professor Skovira and the TAs for their invaluable help; this project could not have come together if not for their guidance and expertise. We would also like to thank the ECE department and Cornell University for this opportunity and the vast resources they provide that allowed us to realize this project.

Parts List

- Raspberry Pi $35.00

- PiTFT Screen $35.00

- Pressure Sensors - $15.90

- LDR Photoresistors - $6.88

- Servo - Provided in lab

- Blinds material - Provided in lab

- USB Microphone - Provided in lab

- LEDs, Resistors and Wires - Provided in lab

Total: $92.78


Code Appendix

#Final Project: pad_test.py

#Authors: Rohit Krishnakumar and Asad Marghoob

#rk496,am2242

import RPi.GPIO as GPIO

import time

import os

import urllib2, json

import pygame

import requests

GPIO.setmode(GPIO.BCM)

#pygame.mouse.set_visible(True)

os.putenv('SDL_VIDEODRIVER', 'fbcon')

os.putenv('SDL_FBDEV', '/dev/fb1')

os.putenv('SDL_MOUSEDRV', 'TSLIB')

os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')

pygame.init()

pygame.mouse.set_visible(False)

WHITE=255,255,255

BLACK=0,0,0

screen=pygame.display.set_mode((320,240))

#set two pins to read pressure mat input

mat_A = 4

mat_B = 19

#initialize states

lights_state = False

blinds_state = False

sensor = 22

GPIO.setup(mat_A, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(mat_B, GPIO.IN, pull_up_down=GPIO.PUD_UP)

GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP)

LED_pin = 5

GPIO.setup(LED_pin, GPIO.OUT)

matA_trigger = [False, 0]

matB_trigger = [False, 0]

people=0

weather_time = 0

weather=[]

#initialize PWM for servo (blinds) control

GPIO.setup(17,GPIO.OUT)

p=GPIO.PWM(17,50)

p.start(0)

my_font = pygame.font.Font(None, 28)

def vc():

os.system('arecord -D plughw:1,0 -d 3 test.wav ')

def fill_screen():

"""

Thsi function updates the TFT screen

with current states, i.e.

# people

blinds_state

lights_state

"""

global people

global lights_state

global blinds_state

if (lights_state):

lights = "On"

else:

lights="Off"

if (blinds_state):

blinds="Open"

else:

blinds= "Closed"

#dict of display entries we are interested in:

my_displays = {('People: '+str(people)):(160, 60),('Blinds '+str(blinds)):(160,110),('Lights '+str(lights)):(160,160)}

screen.fill(BLACK)

for my_text, text_pos in my_displays.items():

text_surface = my_font.render(my_text, True, WHITE)

rect=text_surface.get_rect(center=text_pos)

screen.blit(text_surface, rect)

pygame.display.flip()

def A_react(channel):
    """
    someone has stepped on the entry mat,
    triggering this callback routine
    """
    global matA_trigger
    global matB_trigger
    global people
    print(people)
    print("A_react")
    if matB_trigger[0] and (time.time() - matB_trigger[1] < 1.5):
        # age mat B's timestamp by 3 s so this press cannot be paired again
        matB_trigger[1] = matB_trigger[1] - 3
        # B then A within 1.5 s: someone has exited the room
        update_counter(False)
    else:
        print("Entering?")
        matA_trigger = [True, time.time()]

def B_react(channel):
    """
    someone has stepped on the exit mat,
    triggering this callback routine
    """
    global matA_trigger
    global matB_trigger
    global people
    print(people)
    print("B_react")
    if matA_trigger[0] and (time.time() - matA_trigger[1] < 1.5):
        # age mat A's timestamp by 3 s so this press cannot be paired again
        matA_trigger[1] = matA_trigger[1] - 3
        # A then B within 1.5 s: someone has entered the room
        update_counter(True)
    else:
        print("Exiting?")
        matB_trigger = [True, time.time()]

def getWeather():
    """
    This function fetches and loads the API data for
    further use. The response is cached for 600 s
    (10 minutes) to limit API calls; returns True
    only when a new request was made.
    """
    global weather_time
    global weather
    if time.time() - weather_time > 600:
        weather = urllib2.urlopen("http://api.openweathermap.org/data/2.5/weather?q=Ithaca,us&APPID=3677a30373be43afd31db572087b9eb7").read()
        weather = json.loads(weather)
        weather_time = time.time()
        return True
    else:
        return False

def blinds(val):
    """
    moves blinds based off of boolean value,
    does nothing if the value already matches
    the current state of the blinds
    """
    global blinds_state
    if val:
        if not blinds_state:
            # open blinds: drive the servo at 10% duty cycle for 5 s, then stop
            p.ChangeDutyCycle(10)
            time.sleep(5)
            p.ChangeDutyCycle(0)
            blinds_state = True
            fill_screen()
            return 0
    else:
        if blinds_state:
            # close blinds: drive the servo at 3% duty cycle for 5 s, then stop
            p.ChangeDutyCycle(3)
            time.sleep(5)
            p.ChangeDutyCycle(0)
            blinds_state = False
            fill_screen()
            return 1

def sun_sensor():
    """
    This function checks whether or not the
    detected light intensity is enough to open
    the blinds upon person(s) entry.
    """
    count_list = []
    for x in range(5):
        # discharge the capacitor by driving the pin low
        GPIO.setup(sensor, GPIO.OUT)
        GPIO.output(sensor, GPIO.LOW)
        time.sleep(.1)
        GPIO.setup(sensor, GPIO.IN)
        count = 0
        # check to see how long the capacitor takes to charge;
        # more light lowers the photoresistor's resistance, so it charges faster
        while GPIO.input(sensor) == GPIO.LOW:
            count += 1
        # add duration to count_list
        count_list.append(count)
    total = sum(count_list)
    # check average value of counts
    if (total / 5) < 100:
        print("Sun sensed")
        return True
    else:
        print("Sun not sensed")
        return False

def lights_all(val):
    """
    performs the main query for entry of first person,
    weighing sensor data and weather API to decide between
    blinds or lights
    """
    global lights_state
    global weather
    print("val:" + str(val))
    if val:
        print(str(getWeather()))
        print("here")
        # check for affirmative between both sensor data and API data:
        # light sensed, OpenWeatherMap condition id >= 700 (no precipitation
        # or thunderstorm), and current time between sunrise and sunset
        if (sun_sensor() and weather['weather'][0]['id'] >= 700
                and weather['sys']['sunrise'] <= time.time()
                and weather['sys']['sunset'] > time.time()):
            print("blinds")
            blinds(True)
        else:
            # close blinds and turn on lights
            print("leds")
            GPIO.output(LED_pin, GPIO.HIGH)
            lights_state = True
            blinds(False)
            fill_screen()
    else:
        GPIO.output(LED_pin, GPIO.LOW)
        blinds(False)
        lights_state = False
        fill_screen()

def leds(val):
    """
    updates lights based off of
    voice control reading
    """
    global lights_state
    if val and not lights_state:
        print("turning lights on")
        GPIO.output(LED_pin, GPIO.HIGH)
        lights_state = True
        fill_screen()
    elif (not val) and lights_state:
        print("turning lights off")
        GPIO.output(LED_pin, GPIO.LOW)
        lights_state = False
        fill_screen()

def update_counter(a):
    """
    upon each complete entry/exit, this function updates
    the number of people and triggers the commands for
    updating lights and blinds
    a: True for an entry, False for an exit
    """
    global people
    global lights_state
    if a:
        people = people + 1
    else:
        if people > 0:
            people = people - 1
    fill_screen()
    # check if there are people in the room
    if people > 0 and (not lights_state):
        print("need light")
        # turn on lights or open blinds
        lights_all(True)
    # compare with ==, not "is": integer identity is not guaranteed
    elif people == 0 and lights_state:
        print("need no light")
        # turn off lights
        lights_all(False)

# interrupt callback init: the mat inputs are pulled up, so a press
# pulls the pin low and produces a falling edge
GPIO.add_event_detect(mat_A, GPIO.FALLING, callback=A_react)
GPIO.add_event_detect(mat_B, GPIO.FALLING, callback=B_react)
fill_screen()

bx = True
while bx:
    time.sleep(3)
    # bailout button (active low)
    if not GPIO.input(27):
        bx = False
    # record a 3 s clip and send it to the wit.ai speech endpoint
    vc()
    os.system("curl -XPOST 'https://api.wit.ai/speech' -i -L -H 'Authorization: Bearer 32TXXDZXGR74UVFCLOJJPU7PQH7RFUF4' -H 'Content-Type: audio/wav' --data-binary '@test.wav' -o out.txt")
    with open('out.txt', 'r') as myfile:
        data = myfile.read()
    # locate the transcript key (ending in "text") and skip past it and
    # its separator to the start of the value
    key = data.find("text")
    start = key + 9
    end = data.find('"', start)
    final = data[start:end]
    print(final)
    # only act if the key was found (find() returns -1 otherwise)
    if key >= 0:
        if "light" in final and "turn" in final and "on" in final:
            # turn lights on
            leds(True)
        elif "light" in final and "turn" in final and "off" in final:
            # turn lights off
            leds(False)
        elif "blind" in final and "open" in final:
            # open blinds
            blinds(True)
        elif "blind" in final and "close" in final:
            # close blinds
            blinds(False)
    # remove file to prepare for more voice control
    os.remove('out.txt')
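The entry/exit pairing performed by A_react and B_react can be checked without any hardware. The class below is a minimal sketch, not the project code. MatCounter, press_a and press_b are hypothetical names, and timestamps are passed in explicitly rather than read from time.time(). It keeps the same 1.5 s pairing window. It also keeps the same trick of pushing a consumed timestamp 3 s into the past, so that a single press cannot be paired twice.

```python
class MatCounter(object):
    """Hypothetical, hardware-free restatement of the A_react/B_react logic.

    Mat A is the entry-side mat and mat B the exit-side mat. Each press
    is reported with an explicit timestamp (seconds) instead of time.time().
    """

    WINDOW = 1.5  # max seconds between the two presses of one crossing
    AGE = 3       # seconds a consumed timestamp is pushed into the past

    def __init__(self):
        self.people = 0
        self.a = [False, 0.0]  # [has mat A fired?, time of last press]
        self.b = [False, 0.0]  # same for mat B

    def press_a(self, now):
        if self.b[0] and now - self.b[1] < self.WINDOW:
            # B then A within the window: someone walked out
            self.b[1] -= self.AGE  # consume B's press so it cannot pair again
            self.people = max(self.people - 1, 0)
        else:
            # possibly the first half of an entry
            self.a = [True, now]

    def press_b(self, now):
        if self.a[0] and now - self.a[1] < self.WINDOW:
            # A then B within the window: someone walked in
            self.a[1] -= self.AGE
            self.people += 1
        else:
            # possibly the first half of an exit
            self.b = [True, now]
```

For example, pressing mat A at t = 0 s and mat B at t = 0.8 s counts one entry. A second, lone press of mat B at t = 1.0 s does not add another person, because mat A's timestamp has already been aged past the window.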
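The decision made in lights_all can be written as two pure functions. The blinds are opened only if the photoresistor senses light, there is no precipitation, and the sun is up. This is a sketch under stated assumptions. sun_from_counts and use_blinds are hypothetical names. The counts stand in for the loop counts collected by sun_sensor. The weather argument is assumed to follow the shape of an OpenWeatherMap current-weather response, in which condition ids below 700 are thunderstorm, drizzle, rain, or snow, and sys.sunrise and sys.sunset are UNIX timestamps.

```python
import time

SUN_COUNT_THRESHOLD = 100  # same threshold as sun_sensor's average-count check


def sun_from_counts(counts, threshold=SUN_COUNT_THRESHOLD):
    """True if the average RC charge-time count is below the threshold.

    A brighter room lowers the photoresistor's resistance, so the
    capacitor charges faster and the busy-wait loop counts fewer times.
    """
    return sum(counts) / float(len(counts)) < threshold


def use_blinds(sun_sensed, weather, now=None):
    """Decide whether opening the blinds should be enough to light the room.

    Assumes `weather` is a decoded OpenWeatherMap current-weather response.
    """
    if now is None:
        now = time.time()
    # ids >= 700: atmosphere (fog, haze, ...), clear, or clouds
    clear_enough = weather['weather'][0]['id'] >= 700
    daytime = weather['sys']['sunrise'] <= now < weather['sys']['sunset']
    return sun_sensed and clear_enough and daytime
```

Passing `now` explicitly makes the daytime check testable with fixed timestamps. In the running system it would simply be time.time().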
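getWeather caches the OpenWeatherMap response for 600 s, so that a stream of entries does not trigger a stream of API calls. A generic version of that pattern is sketched below. TTLCache and its fetch and clock parameters are hypothetical. They are introduced only so that the caching rule can be tested with a fake clock.

```python
import time


class TTLCache(object):
    """Hypothetical generalization of getWeather's 600 s cache.

    fetch: zero-argument callable that performs the real request
    ttl:   seconds before a cached value is considered stale
    clock: injectable time source (defaults to time.time)
    """

    def __init__(self, fetch, ttl=600, clock=time.time):
        self.fetch = fetch
        self.ttl = ttl
        self.clock = clock
        self.value = None
        self.stamp = None  # None means nothing has been fetched yet

    def get(self):
        now = self.clock()
        # refetch on first use or once the cached value is older than ttl
        if self.stamp is None or now - self.stamp > self.ttl:
            self.value = self.fetch()
            self.stamp = now
        return self.value
```

In the project, fetch would open the OpenWeatherMap URL and decode the JSON, and get() would replace the weather_time bookkeeping.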
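The duty cycles passed to p.ChangeDutyCycle only make sense relative to the 50 Hz PWM frequency set at the top of the script. One period is 20 ms, so a duty cycle of d percent produces a pulse of 20 × d / 100 ms. The helpers below (hypothetical names, not part of the project) do that arithmetic. A 10% duty cycle gives a 2.0 ms pulse, which the script uses to open the blinds. A 3% duty cycle gives 0.6 ms, which it uses to close them. A 0% duty cycle sends no pulses at all.

```python
def pulse_width_ms(duty_percent, freq_hz=50):
    """Pulse width (ms) produced by a PWM signal at the given duty cycle."""
    period_ms = 1000.0 / freq_hz
    return period_ms * duty_percent / 100.0


def duty_for_pulse_ms(pulse_ms, freq_hz=50):
    """Duty cycle (percent) needed to produce a pulse of pulse_ms."""
    period_ms = 1000.0 / freq_hz
    return pulse_ms * 100.0 / period_ms
```

Hobby servos are usually neutral (or, for continuous-rotation models, stopped) near a 1.5 ms pulse, which is 7.5% at 50 Hz. The two settings therefore drive the servo to opposite sides of neutral.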
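The main loop recovers the transcript by searching the saved curl output for the substring "text", and then matches keywords with find(). A more defensive version is sketched below. It rests on two assumptions that the listing does not confirm. First, the response body is a JSON object that follows the HTTP headers written by curl -i. Second, the transcript is stored under a "_text" key. extract_transcript and parse_command are hypothetical names. parse_command returns a (device, state) pair that could be passed to leds or blinds.

```python
import json

LIGHT_WORDS = {"light", "lights"}
BLIND_WORDS = {"blind", "blinds"}


def extract_transcript(raw, key="_text"):
    """Return the transcript from a saved `curl -i` response, or None.

    Assumption: the JSON body starts at the first '{' (HTTP headers
    contain none) and stores the transcript under `key`.
    """
    start = raw.find("{")
    if start < 0:
        return None
    try:
        body = json.loads(raw[start:])
    except ValueError:  # malformed or truncated body
        return None
    return body.get(key)


def parse_command(text):
    """Map a transcript to (device, on/open?) or None using whole words."""
    words = set(text.lower().replace(",", " ").replace(".", " ").split())
    if words & LIGHT_WORDS and "turn" in words:
        if "on" in words:
            return ("lights", True)
        if "off" in words:
            return ("lights", False)
    if words & BLIND_WORDS:
        if "open" in words:
            return ("blinds", True)
        if "close" in words:
            return ("blinds", False)
    return None
```

Matching whole words rather than substrings avoids a pitfall of the find-based checks. The command "turn off the lights in the conference room" contains the substring "on" (inside "conference"), so a substring test for "on" would switch the lights on.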